Lec 13 Symbolic Representation and Reasoning SNNs Based on BrainCog

Hello everyone! In this lecture, I will introduce our work on modeling spiking neural networks for symbolic representation and reasoning, inspired by the population coding mechanism. Today's introduction is divided into three parts. Let's start with a brief look at the research background.

Broadly speaking, approaches to building artificial intelligence systems fall into two schools: symbolism and connectionism. Symbolic artificial intelligence is built on rule-based symbol systems and logic systems. By having the machine imitate how humans carry out symbolic reasoning at an abstract level, it can complete reasoning tasks such as proving mathematical theorems. Connectionist artificial intelligence is a mathematical model that imitates the neural networks of the brain, and it can be viewed abstractly as a composition of operations over a set of variable parameters. These parameters are fitted in a data-driven way, so that the model acquires the ability to complete cognitive tasks. The human brain, however, performs symbolic representation and reasoning over complex systems using neural networks. Inspired by the connection structure and working mechanisms of neural circuits in the brain, we set out to build a brain-inspired spiking neural network model for symbolic representation and reasoning and implemented it on the open-source BrainCog platform.

This lecture covers three sub-modules: the symbolic sequence memory and production spiking neural network, the commonsense knowledge representation spiking neural network, and the causal reasoning spiking neural network.

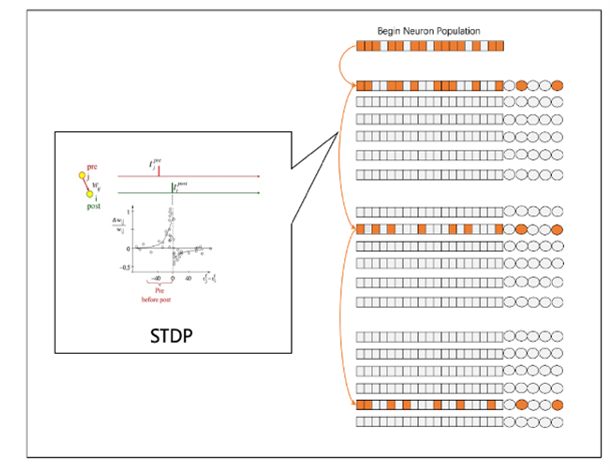

The first part is the symbolic sequence memory and production SNN. This work is inspired by the experimental paradigm in which macaques memorize and produce symbolic sequences. Following the population coding mechanism found in the macaque brain, we divide the neurons of the spiking network into populations, with each symbol corresponding to a different population. During the experiment, we apply current stimulation to the different populations at different times so that they fire one after another, and the synaptic weights between populations are learned with the biologically grounded STDP (spike-timing-dependent plasticity) mechanism. In the end, the network can memorize and produce the sequence of symbols. By running the code, you can see the spike trains and the weight distribution of the network after training is completed.
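
The idea of sequential stimulation plus STDP between populations can be illustrated with a minimal sketch in plain NumPy. This is not the SPSNN code and does not use BrainCog's API; the function names `train_sequence` and `recall`, the leaky integrate-and-fire parameters, and the trace-based pair STDP rule are all assumptions chosen for illustration.

```python
import numpy as np

def train_sequence(sequence, n_symbols, pop_size=5, steps_per_symbol=20,
                   a_plus=0.02, a_minus=0.01, tau_trace=10.0):
    """Stimulate one population per symbol in order and learn weights with pair STDP.

    Returns the mean population-to-population weights, indexed w_pop[post, pre].
    All parameter values are illustrative assumptions.
    """
    n = n_symbols * pop_size
    w = np.zeros((n, n))          # synaptic weights, w[post, pre]
    v = np.zeros(n)               # membrane potentials
    trace = np.zeros(n)           # decaying record of each neuron's recent spikes
    v_th, tau_m, drive = 1.0, 5.0, 1.5

    for symbol in sequence:
        # Inject current only into the population that codes for this symbol.
        current = np.zeros(n)
        current[symbol * pop_size:(symbol + 1) * pop_size] = drive
        for _ in range(steps_per_symbol):
            v += (-v + current) / tau_m                # leaky integration
            spikes = (v >= v_th).astype(float)
            v[spikes > 0] = 0.0                        # reset after a spike
            # Potentiation: a post spike now strengthens inputs from recently active pre neurons.
            w += a_plus * np.outer(spikes, trace)
            # Depression: a pre spike now weakens synapses onto recently active post neurons.
            w -= a_minus * np.outer(trace, spikes)
            np.clip(w, 0.0, 1.0, out=w)
            trace += -trace / tau_trace + spikes

    # Average the neuron-level weights within each pair of populations.
    w_pop = w.reshape(n_symbols, pop_size, n_symbols, pop_size).mean(axis=(1, 3))
    np.fill_diagonal(w_pop, 0.0)  # keep only weights between different populations
    return w_pop

def recall(w_pop, start, max_len=10):
    """Produce a sequence by repeatedly following the strongest outgoing weight."""
    produced, current = [start], start
    for _ in range(max_len - 1):
        nxt = int(np.argmax(w_pop[:, current]))
        if w_pop[nxt, current] <= 0.0:
            break                 # no learned successor
        produced.append(nxt)
        current = nxt
    return produced
```

For example, `recall(train_sequence([0, 2, 1], n_symbols=3), start=0)` walks the learned weights from symbol 0 onward. Because a population that fired earlier leaves a larger trace for its immediate successor than for later ones, the strongest outgoing weight points to the next symbol in the trained order.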

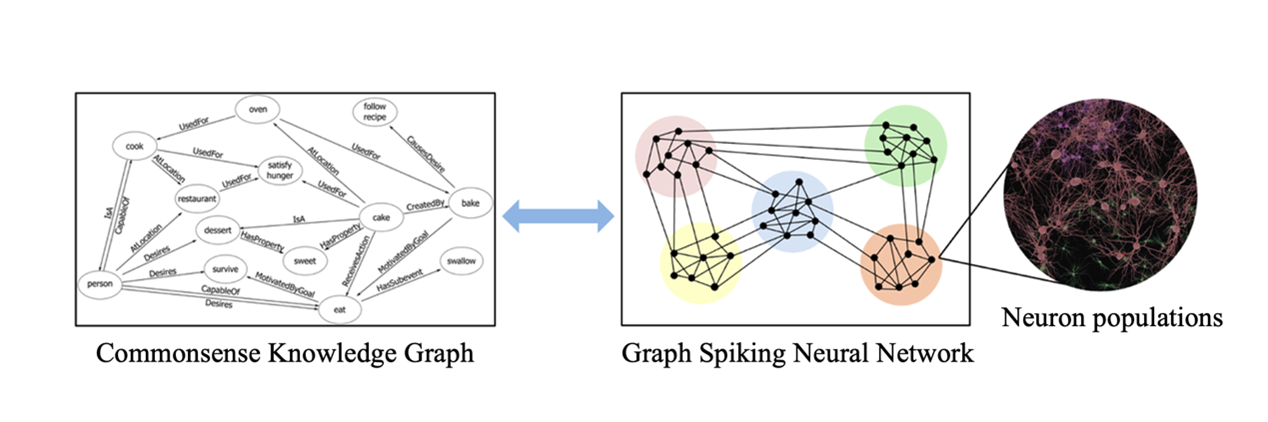

The second part is the commonsense knowledge representation SNN. Artificial intelligence researchers consider commonsense knowledge representation a key to realizing human-level intelligence. This research aims to endow spiking networks with commonsense knowledge and to lay a foundation for building more general spiking-network intelligent systems in the future. In this work, the nodes and relations of a knowledge graph are represented as neuron populations in the spiking neural network. The populations are then stimulated according to the topological structure between nodes and relations in the knowledge graph so that they fire sequentially, and triplet knowledge is learned through the STDP mechanism, which encodes the commonsense knowledge into the spiking neural network. Now let's look at the code. In the implementation, we built CKRNet, which inherits from BrainCog's BrainArea class and calls the STDP method in the connection class to realize the expected function.
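
As a rough illustration of how triplets might be encoded, the sketch below treats each entity and relation as a single abstract unit standing in for a population, fires head, relation, and tail in that order, and applies an exponential STDP kernel to the spike-time differences. It is written in plain NumPy and is not CKRNet; the helper names (`build_vocab`, `learn_triples`, `query_tail`), the example triples, and the additive readout used for querying are assumptions made for this example.

```python
import numpy as np

def build_vocab(triples):
    """Assign one population index to every entity and relation in the triples."""
    names = []
    for triple in triples:
        for name in triple:
            if name not in names:
                names.append(name)
    return {name: i for i, name in enumerate(names)}

def learn_triples(triples, vocab, dt=5.0, tau=10.0,
                  a_plus=0.1, a_minus=0.05, epochs=3):
    """Fire head -> relation -> tail for each triple and apply a pair STDP kernel.

    Returns weights indexed w[post, pre]; all parameter values are illustrative.
    """
    n = len(vocab)
    w = np.zeros((n, n))
    for _ in range(epochs):
        for triple in triples:
            ids = [vocab[name] for name in triple]
            times = [k * dt for k in range(3)]   # head at 0, relation at dt, tail at 2*dt
            for pre, t_pre in zip(ids, times):
                for post, t_post in zip(ids, times):
                    if t_post > t_pre:           # pre fired first: potentiate
                        w[post, pre] += a_plus * np.exp(-(t_post - t_pre) / tau)
                    elif t_post < t_pre:         # post fired first: depress
                        w[post, pre] -= a_minus * np.exp(-(t_pre - t_post) / tau)
    return np.clip(w, 0.0, None)

def query_tail(w, vocab, head, relation):
    """Return the unit that receives the most combined input from head and relation."""
    scores = w[:, vocab[head]] + w[:, vocab[relation]]
    scores[[vocab[head], vocab[relation]]] = -np.inf   # exclude the query units themselves
    index_to_name = {i: name for name, i in vocab.items()}
    return index_to_name[int(np.argmax(scores))]
```

With the hypothetical triples `("bird", "can", "fly")`, `("fish", "can", "swim")`, and `("bird", "has", "wings")`, the query `query_tail(w, vocab, "bird", "can")` favours the unit that is driven by both the head and the relation, which is how the sketch distinguishes `fly` from `swim` and `wings`.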

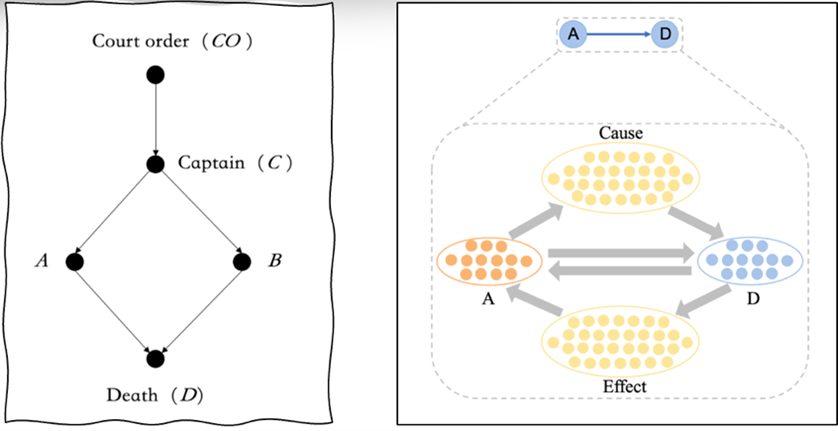

The third part is the causal reasoning SNN. There is a huge gap between data-driven deep learning systems and the human cognitive process. One key difference is that humans habitually ask why, exploring the differences between things in their environment from a causal perspective. Inspired by causal graphs, we propose a causal reasoning spiking neural network. In the future, we hope the spiking network can interact with the environment and build a dynamic causal graph. As a first step, however, we fix the causal graph and represent its nodes and relations as spiking neuron populations. The causal graph is then learned through stimulation and the STDP learning rule, consistent with the previous work. Now let's look at the code. In the implementation, we built CRNet, which inherits from BrainCog's BrainArea class and calls the STDP method in the connection class to learn the causal graph and realize the expected function. By running the code, you can see the spike trains and the weight distribution of the network after training is completed.
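
To make the reasoning step concrete, here is a minimal sketch of learning a fixed causal graph and then reading out effects by spike propagation. It uses plain NumPy rather than CRNet or any BrainCog class, represents each node as one abstract unit, and omits separate relation populations for brevity. The function names `learn_causal_graph` and `infer_effects` and all parameter values are assumptions.

```python
import numpy as np

def learn_causal_graph(edges, n_nodes, dt=2.0, tau=10.0,
                       a_plus=0.5, a_minus=0.25, epochs=3, w_max=1.5):
    """Stimulate each cause shortly before its effect and apply a pair STDP kernel.

    edges is a list of (cause, effect) node indices; weights are w[post, pre].
    """
    w = np.zeros((n_nodes, n_nodes))
    kernel = np.exp(-dt / tau)
    for _ in range(epochs):
        for cause, effect in edges:
            w[effect, cause] += a_plus * kernel    # cause fired first: potentiate
            w[cause, effect] -= a_minus * kernel   # reverse direction: depress
    return np.clip(w, 0.0, w_max)

def infer_effects(w, source, steps=20, v_th=1.0, decay=0.5, stim_steps=3):
    """Stimulate one node and record when every node first spikes (-1 means never)."""
    n = w.shape[0]
    v = np.zeros(n)
    spikes = np.zeros(n)
    first_spike = np.full(n, -1)
    for t in range(steps):
        external = np.zeros(n)
        if t < stim_steps:
            external[source] = 1.5                 # drive only the queried cause
        # Leaky integration of external drive and spikes from the previous step.
        v = decay * v + external + w @ spikes
        spikes = (v >= v_th).astype(float)
        v[spikes > 0] = 0.0                        # reset after a spike
        newly_fired = (spikes > 0) & (first_spike < 0)
        first_spike[newly_fired] = t
    return first_spike
```

As a usage example under these assumptions, take nodes 0 (rain), 1 (wet ground), 2 (slippery road), and 3 (sprinkler) with edges `[(0, 1), (3, 1), (1, 2)]`. After `w = learn_causal_graph(edges, n_nodes=4)`, calling `infer_effects(w, source=0)` shows the downstream effects of rain firing one step after another, while the sprinkler, which is not an effect of rain, stays silent.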

The code for the three parts is available at:

https://github.com/BrainCog-X/Brain-Cog/tree/main/examples/Knowledge_Representation_and_Reasoning/SPSNN

https://github.com/BrainCog-X/Brain-Cog/tree/main/examples/Knowledge_Representation_and_Reasoning/CKRGSNN

https://github.com/BrainCog-X/Brain-Cog/tree/main/examples/Knowledge_Representation_and_Reasoning/CRSNN

If you are interested in our work, please cite our articles, and feel free to contact us by email!

@article{fang2021spsnn,

title = {Brain inspired sequences production by spiking neural networks with reward-modulated STDP},

author = {Fang, Hongjian and Zeng, Yi and Zhao, Feifei},

journal = {Frontiers in Computational Neuroscience},

volume = {15},

pages = {8},

year = {2021},

publisher = {Frontiers}

}

@article{KRRfang2022,

title = {Brain-inspired Graph Spiking Neural Networks for Commonsense Knowledge Representation and Reasoning},

author = {Fang, Hongjian and Zeng, Yi and Tang, Jianbo and Wang, Yuwei and Liang, Yao and Liu, Xin},

journal = {arXiv preprint arXiv:2207.05561},

year = {2022}

}

@inproceedings{fang2021CRSNN,

title={A Brain-Inspired Causal Reasoning Model Based on Spiking Neural Networks},

author={Fang, Hongjian and Zeng, Yi},

booktitle={2021 International Joint Conference on Neural Networks (IJCNN)},

pages={1--5},

year={2021},

organization={IEEE}

}

@misc{https://doi.org/10.48550/arxiv.2207.08533,

doi = {10.48550/ARXIV.2207.08533},

url = {https://arxiv.org/abs/2207.08533},

author = {Zeng, Yi and Zhao, Dongcheng and Zhao, Feifei and Shen, Guobin and Dong, Yiting and Lu, Enmeng and Zhang, Qian and Sun, Yinqian and Liang, Qian and Zhao, Yuxuan and Zhao, Zhuoya and Fang, Hongjian and Wang, Yuwei and Li, Yang and Liu, Xin and Du, Chengcheng and Kong, Qingqun and Ruan, Zizhe and Bi, Weida},

title = {BrainCog: A Spiking Neural Network based Brain-inspired Cognitive Intelligence Engine for Brain-inspired AI and Brain Simulation},

publisher = {arXiv},

year = {2022},

}