Lec 8: Converting ANNs to SNNs with BrainCog

Current training algorithms for spiking neural networks (SNNs) fall into three categories. Biologically plausible methods such as STDP and surrogate-gradient methods such as STBP generally perform worse and struggle to match the accuracy of artificial neural networks (ANNs). The third category, conversion, transfers the parameters of a trained ANN to an SNN. It benefits from both backpropagation training and the efficiency of SNNs, reaching accuracy comparable to the ANN at lower power consumption.

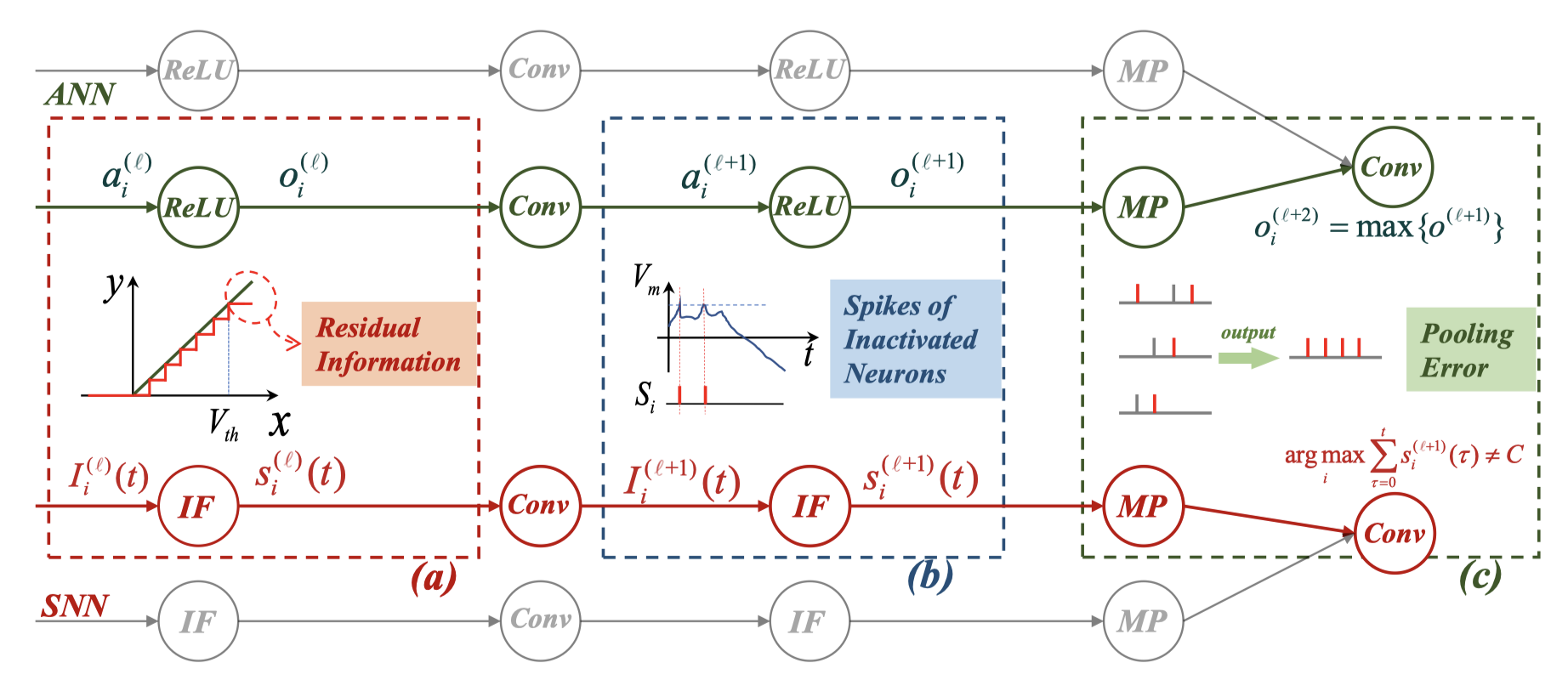

However, ANN-SNN conversion typically suffers from severe accuracy loss and long inference latency. Our work analyzes in depth the errors that degrade current conversion frameworks: residual information, spikes of inactivated neurons (SIN), and pooling errors. Strictly speaking, residual information comprises clipping errors and floor errors. Assume the total simulation time is T time steps. A neuron's maximum firing rate is 1, reached when it emits a spike at every time step; a neuron that fires only one spike has a firing rate of 1/T. The firing rate can therefore only take values in steps of 1/T up to 1: activations (normalized by the firing threshold) above 1 are clipped, and the remainder below the next 1/T step is truncated. This residual information stays in the membrane potential, cannot be delivered by spikes, and degrades the conversion.
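Both kinds of residual error can be reproduced with a single IF neuron. The sketch below is our own plain-Python illustration (the function name `if_neuron` is hypothetical, not a BrainCog API); it assumes a constant input current, a threshold θ = 1, and soft reset (reset by subtraction).

```python
def if_neuron(x, T, theta=1.0, v_init=0.0):
    """Simulate a soft-reset IF neuron driven by a constant input x for T steps.

    At most one spike is emitted per step. Returns (firing_rate, residual_potential).
    """
    v = v_init
    spikes = 0
    for _ in range(T):
        v += x                  # integrate the input current
        if v >= theta:
            spikes += 1
            v -= theta          # soft reset: the surplus stays in the membrane
    return spikes / T, v


# ANN activation 0.625 -> rate floor(0.625 * 4) / 4 = 0.5 (floor error), 0.5 left in v
print(if_neuron(0.625, T=4))    # (0.5, 0.5)
# ANN activation 1.5 -> rate capped at 1.0 (clipping error), residual keeps growing
print(if_neuron(1.5, T=4))      # (1.0, 2.0)
```

In both cases the leftover potential never leaves the neuron, which is exactly the information the next layer does not receive.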

Some recent works compare the response of ReLU in the ANN with that of IF neurons in the SNN and shift the ReLU function to obtain a statistically optimal match; others, similar to ReLU6, cap the activation values in the ANN. Both approaches reduce, to some extent, the influence of residual information on the conversion.
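A minimal sketch of one common way to realize such a shift: starting the membrane potential at θ/2 turns the floor in the firing rate into rounding, which roughly halves the average error for activations in [0, 1). This particular mechanism is an assumption for illustration (the text does not say how the works it refers to implement the shift), and the helper names are hypothetical.

```python
def if_spike_count(x, T, theta=1.0, v_init=0.0):
    """Spike count of a soft-reset IF neuron with constant input x over T steps."""
    v, n = v_init, 0
    for _ in range(T):
        v += x
        if v >= theta:
            n += 1
            v -= theta
    return n


def mean_abs_error(T, v_init, num=1000):
    """Average |activation - firing rate| for activations evenly spaced in [0, 1)."""
    total = 0.0
    for k in range(num):
        x = k / num
        total += abs(x - if_spike_count(x, T, v_init=v_init) / T)
    return total / num


print(if_spike_count(0.375, 4, v_init=0.0))   # 1: floor(1.5)
print(if_spike_count(0.375, 4, v_init=0.5))   # 2: floor(1.5 + 0.5), i.e. rounding
print(mean_abs_error(8, 0.0), mean_abs_error(8, 0.5))  # about 0.062 and 0.031
```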

Inspired by the burst-firing mechanism of biological neurons, we introduce burst neurons into the converted SNN. A burst neuron can emit several spikes between two simulation steps, and the neurons of the next layer process these spikes at the next time step. Burst spikes thus allow the residual information in the membrane potential to be released between simulation steps, whereas a regular neuron can release at most one spike per time step.
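The effect of burst spikes can be sketched by letting the neuron release up to `max_burst` spikes in one step (a hypothetical name for the per-step spike cap). This is a simplified illustration under the same assumptions as a plain IF neuron (constant input, θ = 1, soft reset), not the exact neuron model of [1].

```python
def burst_if(x, T, theta=1.0, max_burst=1):
    """Soft-reset IF neuron that may emit up to `max_burst` spikes per step.

    max_burst=1 is a regular IF neuron. Returns (spike_count, residual_potential).
    """
    v, count = 0.0, 0
    for _ in range(T):
        v += x
        n = max(0, min(int(v // theta), max_burst))  # spikes released this step
        count += n
        v -= n * theta                                # subtract theta for every spike
    return count, v


print(burst_if(1.5, T=4, max_burst=1))  # (4, 2.0): the residual is stuck in the membrane
print(burst_if(1.5, T=4, max_burst=2))  # (6, 0.0): 6 / 4 = 1.5 matches the ANN activation
```

With bursts, the next layer receives spike counts per step rather than single spikes, so its effective input rate can exceed 1.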

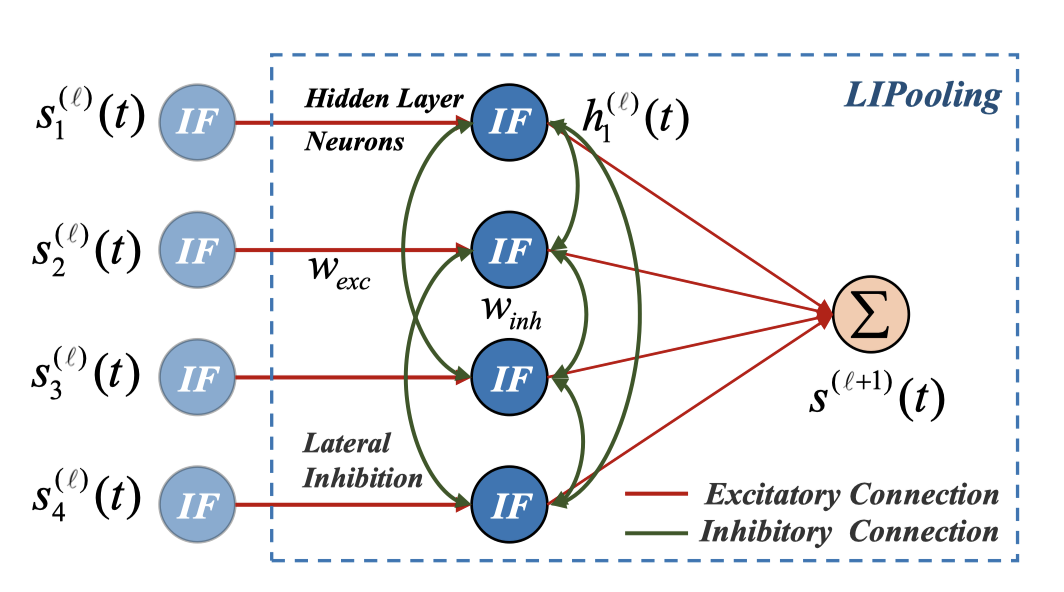

When a MaxPooling layer is converted directly, the SNN usually produces more output spikes than expected, as shown on the left of the figure above. Inspired by the mechanism of lateral inhibition, we propose lateral inhibition pooling (LIPooling). It uses mutual inhibition among the spikes of the neurons in a pooling window to keep the firing rate of the output neuron at its expected value, with a lateral inhibition strength of 1.
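The pooling error is easy to reproduce with two inputs that fire alternately at rate 0.5, so the expected max-pooled rate is 0.5; taking the maximum of the spikes at every step fires at every step instead. The second function is a simplified stand-in for the effect LIPooling aims at (the running output count tracks the largest running input count). It is our own sketch with hypothetical names, not necessarily the formulation in [1].

```python
def naive_spike_maxpool(spike_trains):
    """Max over the window at every step: fires whenever any input fires."""
    return [max(step) for step in zip(*spike_trains)]


def cumulative_max_pool(spike_trains):
    """Emit spikes so the running output count equals the largest running input count."""
    counts = [0] * len(spike_trains)
    prev_max = 0
    out = []
    for step in zip(*spike_trains):
        for i, s in enumerate(step):
            counts[i] += s
        cur_max = max(counts)
        out.append(cur_max - prev_max)   # 0 or 1, since each input adds at most 1
        prev_max = cur_max
    return out


a = [1, 0, 1, 0, 1, 0, 1, 0]   # rate 0.5
b = [0, 1, 0, 1, 0, 1, 0, 1]   # rate 0.5
print(sum(naive_spike_maxpool([a, b])) / len(a))   # 1.0: twice the expected rate
print(cumulative_max_pool([a, b]))                 # [1, 0, 1, 0, 1, 0, 1, 0]
```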

In addition, a neuron whose input in the ANN is less than 0 is not activated, so the corresponding neuron in the SNN should not fire. Because spikes are discrete, however, such a neuron can still fire once it accumulates enough membrane potential within a short time, and this effect does not disappear as the simulation time increases. Since classification only depends on the maximum output, we did not focus on this spikes-of-inactivated-neurons (SIN) problem, but its effect on conversion is worth exploring. One possible solution is to detect such spikes and correct them with negative spikes, as described in the article below.
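A small example of a spike of an inactivated neuron, assuming hypothetical inputs where the positive currents happen to arrive before the negative ones: the mean input is negative, so the ANN neuron would output 0, yet the IF neuron fires once, and further negative input never cancels that spike. Negative-spike correction is not implemented here.

```python
def if_spike_times(inputs, theta=1.0):
    """Steps at which a soft-reset IF neuron fires for a given input sequence."""
    v, times = 0.0, []
    for t, x in enumerate(inputs):
        v += x
        if v >= theta:
            times.append(t)
            v -= theta
    return times


short = [0.75, 0.75] + [-0.5] * 6
longer = [0.75, 0.75] + [-0.5] * 100
print(sum(short) / len(short))   # -0.1875: ReLU in the ANN would output 0
print(if_spike_times(short))     # [1]
print(if_spike_times(longer))    # [1]: the early spike is never taken back
```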

We performed ablation experiments on CIFAR-100 with VGG16 and ResNet20; our method greatly reduces inference latency and improves the best accuracy.

We also compared our work with other conversion methods: our method achieves almost lossless conversion and outperforms them.

BrainCog provides a base converter that implements mainstream rate-based conversion techniques such as soft reset, p-norm, channel_norm, Conv-BN layer fusion, LIPooling, and burst spikes. The converter parameters are initialized first, with p-norm used by default. Conversion then proceeds in three main steps: obtaining the normalization parameters, fusing the convolutional and BN layers, and replacing the ReLU layers (and, of course, the pooling layers) with IF neurons or other desired neurons. BrainCog's `node` module provides many neuron types commonly used in SNNs. Note that when the ReLU activations of the ANN are already bounded by an upper limit, the normalization parameters can be supplied directly or this step can be skipped.
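Conv-BN fusion works because, at inference time, BatchNorm is an affine map per output channel, so it can be folded into the preceding layer: W'_c = W_c * γ_c / sqrt(σ²_c + ε) and b'_c = (b_c - μ_c) * γ_c / sqrt(σ²_c + ε) + β_c. The plain-Python sketch below shows this for a fully connected layer (a convolution is folded the same way, filter by filter); it is an illustration with hypothetical helper names, not BrainCog's implementation.

```python
import math


def fuse_bn(weight, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold an inference-mode BatchNorm into the preceding layer.

    weight[c] holds the (flattened) weights of output channel c, bias[c] its bias.
    """
    fused_w, fused_b = [], []
    for c, row in enumerate(weight):
        scale = gamma[c] / math.sqrt(var[c] + eps)
        fused_w.append([w * scale for w in row])
        fused_b.append((bias[c] - mean[c]) * scale + beta[c])
    return fused_w, fused_b


def linear(weight, bias, x):
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    return [g * (yc - m) / math.sqrt(s + eps) + bt
            for yc, g, bt, m, s in zip(y, gamma, beta, mean, var)]


W, b = [[0.5, -1.0], [2.0, 0.25]], [0.1, -0.2]
gamma, beta, mean, var = [1.5, 0.8], [0.0, 0.3], [0.2, -0.1], [4.0, 0.25]
x = [1.0, 2.0]
Wf, bf = fuse_bn(W, b, gamma, beta, mean, var)
print(batch_norm(linear(W, b, x), gamma, beta, mean, var))
print(linear(Wf, bf, x))   # same values up to floating-point rounding
```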

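```python
import torch.nn as nn


class Convertor(nn.Module):
    def __init__(self,
                 dataloader,          # data used to collect activation statistics
                 device=None,
                 p=0.9995,            # percentile used by p-norm
                 channelnorm=False,   # channel-wise instead of layer-wise normalization
                 lipool=True,         # replace MaxPooling with lateral inhibition pooling
                 gamma=1,             # burst spike setting (presumably the per-step spike cap)
                 soft_mode=True,      # soft reset (reset by subtraction)
                 merge=True,          # fuse Conv and BN layers
                 batch_num=1,         # presumably the number of batches used for statistics
                 ):
        super().__init__()
        # The rest of the initialization is omitted here; see the BrainCog repository.
```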
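Assuming p-norm refers to the common percentile-based data normalization (our reading of the name and of the default `p=0.9995`), the normalization step can be sketched as follows: record each layer's ANN activations on a few batches, take their p-th percentile λ_l, and rescale W_l by λ_{l-1}/λ_l and b_l by 1/λ_l. This layer-wise version uses hypothetical helper names and is our own sketch; the channel-wise variant (`channelnorm=True`) is not shown.

```python
import math


def percentile(values, p):
    """Nearest-rank p-quantile (0 < p <= 1) of a list of activations."""
    s = sorted(values)
    k = max(0, math.ceil(p * len(s)) - 1)
    return s[k]


def p_norm(layers, activations, p=0.9995):
    """Layer-wise percentile normalization.

    layers: [(weight_rows, bias), ...] for consecutive layers
    activations: recorded ANN outputs of each layer (flat lists)
    Applies W_l <- W_l * lam_{l-1} / lam_l and b_l <- b_l / lam_l, with lam_0 = 1.
    """
    prev, normalized = 1.0, []
    for (w, b), acts in zip(layers, activations):
        lam = percentile(acts, p)   # assumed > 0 for every layer
        normalized.append(([[wij * prev / lam for wij in row] for row in w],
                           [bi / lam for bi in b]))
        prev = lam
    return normalized


layers = [([[2.0]], [1.0]), ([[3.0]], [0.0])]
activations = [[1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]]
print(p_norm(layers, activations, p=1.0))   # [([[0.5]], [0.25]), ([[1.5]], [0.0])]
```

With p=1 this reduces to max-norm; a p slightly below 1 ignores rare outliers, at the cost of clipping them.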

BrainCog also provides a conversion example. First, train a good ANN; then simply load its weights and instantiate the converter, and BrainCog runs inference with the converted spiking neural network.
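To make the overall pipeline concrete without relying on BrainCog's API, here is a toy plain-Python sketch: a two-layer ReLU network with hypothetical weights (assumed already normalized so activations stay in [0, 1], no biases) is run once as an ANN and once with every ReLU replaced by a soft-reset IF neuron, with the input fed as a constant current. With enough time steps, the output firing rates approach the ANN outputs.

```python
def relu(v):
    return [max(0.0, a) for a in v]


def matvec(w, x):
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def ann_forward(weights, x):
    h = x
    for w in weights:
        h = relu(matvec(w, h))
    return h


def snn_forward(weights, x, T, theta=1.0):
    """Same weights, ReLU replaced by soft-reset IF neurons; returns output firing rates."""
    v = [[0.0] * len(w) for w in weights]    # membrane potentials of every layer
    counts = [0.0] * len(weights[-1])
    for _ in range(T):
        signal = x                            # input injected as a constant current
        for layer, w in enumerate(weights):
            spikes = []
            for i, current in enumerate(matvec(w, signal)):
                v[layer][i] += current
                fired = v[layer][i] >= theta
                if fired:
                    v[layer][i] -= theta      # soft reset
                spikes.append(1.0 if fired else 0.0)
            signal = spikes                   # spikes drive the next layer in the same step
        for i, s in enumerate(signal):
            counts[i] += s
    return [c / T for c in counts]


weights = [[[0.5, 1.0], [1.0, -0.5]],        # hypothetical, already-normalized weights
           [[1.0, 0.5], [-0.5, 1.0]]]
x = [0.5, 0.25]
print(ann_forward(weights, x))               # [0.6875, 0.125]
print(snn_forward(weights, x, T=1000))       # close to the ANN outputs
```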

For details, please refer to the papers and the BrainCog code bases listed below. Thank you all.

Source code

https://github.com/BrainCog-X/Brain-Cog

https://github.com/Brain-Cog-Lab/Conversion_Burst

References

[1] Yang Li and Yi Zeng. Efficient and accurate conversion of spiking neural network with burst spikes. In Luc De Raedt, editor, Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, IJCAI-22, pages 2485–2491, July 2022.

[2] Yi Zeng, Dongcheng Zhao, Feifei Zhao, et al. BrainCog: A spiking neural network based brain-inspired cognitive intelligence engine for brain-inspired AI and brain simulation. arXiv preprint arXiv:2207.08533, 2022.