Lec 9 SNNs with Global Feedback Connections Based on BrainCog

Training spiking neural networks (SNNs) has long been a challenge for researchers. Besides backpropagation-based algorithms, researchers have tried to update network weights by drawing on how the brain learns. It is well known that the brain regulates the strength of connections between neurons through synaptic plasticity. Most current models of synaptic plasticity, such as spike-timing-dependent plasticity (STDP), long-term plasticity, and short-term plasticity, are local, unsupervised optimization rules. When these local rules are used to optimize a network, no supervised signal reaches the network, so deep SNNs trained this way have difficulty converging.
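To make "local and unsupervised" concrete, here is a minimal sketch of the classic pair-based STDP rule. The parameter names and values (`a_plus`, `a_minus`, `tau_plus`, `tau_minus`) are illustrative assumptions, not values taken from BrainCog or the GLSNN paper. The weight change depends only on the relative spike times of the two neurons the synapse connects, and no label or error signal enters the rule.

```python
import math


def stdp_delta_w(t_pre: float, t_post: float,
                 a_plus: float = 0.01, a_minus: float = 0.012,
                 tau_plus: float = 20.0, tau_minus: float = 20.0) -> float:
    """Pair-based STDP weight change for one pre/post spike pair (times in ms).

    Illustrative parameters; only local spike timing is used, no supervision.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fires before post: potentiation, decaying with the time gap.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fires before pre: depression, decaying with the time gap.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


print(stdp_delta_w(10.0, 15.0))  # pre before post: positive change
print(stdp_delta_w(15.0, 10.0))  # post before pre: negative change
```

Because nothing in the rule knows what the network output should be, stacking many layers trained this way gives no guarantee that the deep network as a whole moves toward the task.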

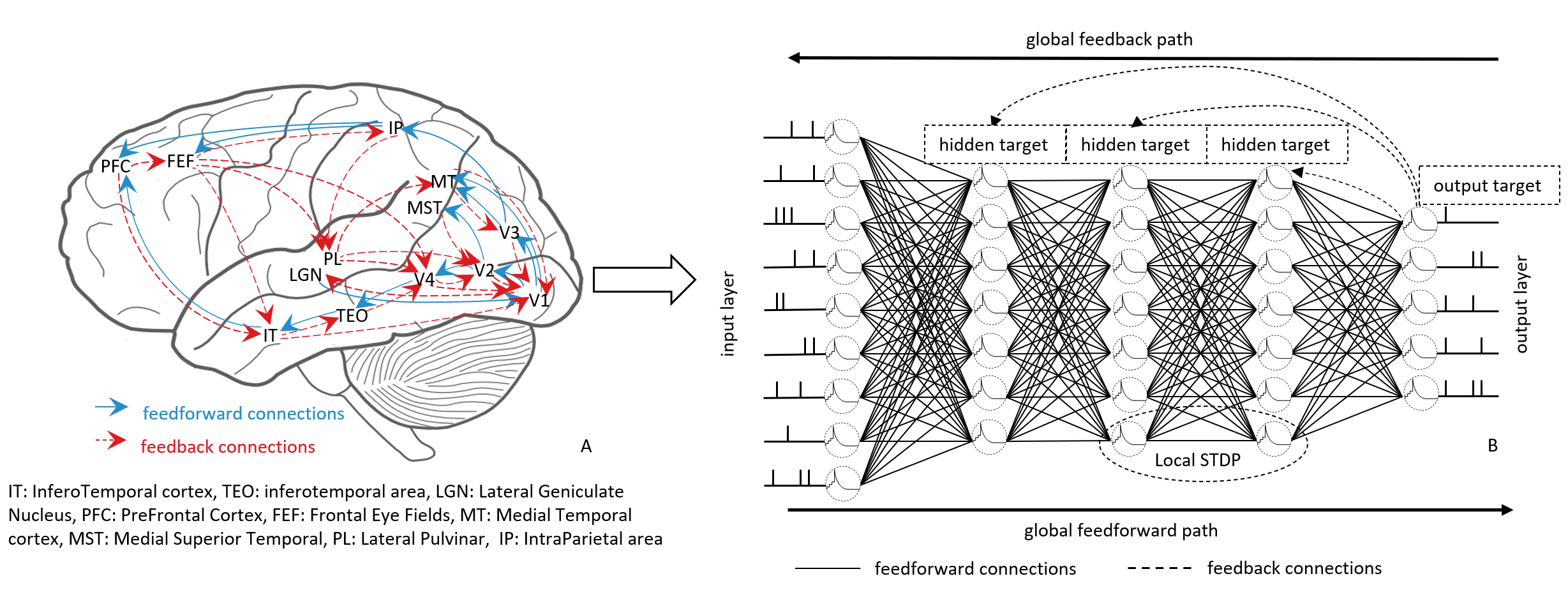

Anatomical and physiological evidence suggests that, in addition to feedforward connections, the brain contains a large number of feedback connections. Learning and inference in the brain rely on the interplay between feedforward and feedback connections. The feedback connections run in the reverse direction, carrying global information from higher cortical areas back to primary cortical areas during perception and learning, and the feedback from higher brain regions computes predictions of the representations in lower brain regions. Combining the difference between the feedforward and feedback neuron states with local synaptic plasticity mechanisms makes it possible to update the weights.
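The last point can be written as a local, delta-style rule: each synapse changes in proportion to its presynaptic activity and to the difference between the postsynaptic neuron's feedback-driven target and its feedforward state. The sketch below assumes firing rates as states and a plain outer-product update; the function name `local_update` and the learning rate are hypothetical, and the exact GLSNN rule is given in the paper cited at the end of this lecture.

```python
import numpy as np


def local_update(w: np.ndarray, pre_rate: np.ndarray,
                 post_rate: np.ndarray, post_target: np.ndarray,
                 lr: float = 0.1) -> np.ndarray:
    """Return new weights W (shape: post x pre) computed from local quantities only.

    Hypothetical rule: dW = lr * outer(target - state, pre_rate).
    """
    delta = post_target - post_rate  # feedback-driven target minus feedforward state
    return w + lr * np.outer(delta, pre_rate)


w = np.zeros((2, 3))
pre = np.array([1.0, 0.0, 0.5])
post = np.array([0.2, 0.8])
target = np.array([0.6, 0.4])
w_new = local_update(w, pre, post, target)
print(w_new)
```

Only the presynaptic rate and the postsynaptic neuron's own target and state enter each weight change, which is what keeps the rule local. In the example the second presynaptic neuron is silent, so its column stays at zero.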

First, we compute the state of the network using the feedforward connections. Next, we compute the difference between the predicted and true outputs and use it to directly compute the target of the penultimate layer. Finally, using the feedback connections, we compute the targets of the remaining hidden layers and update the weights between neighboring layers with the local synaptic plasticity rule. Because the feedback connections project directly from the output layer to each hidden layer, the parameters of all hidden layers can be updated at the same time. The feedback weights themselves are never updated, so they add no extra weight-update cost.
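The three steps can be sketched as a small NumPy toy. This is a rate-based sketch under explicit assumptions, not the GLSNN implementation: sigmoid rates stand in for LIF firing rates; the class name `TinyGlobalFeedbackNet` and the parameters `lr` and `eta` are hypothetical; the penultimate-layer target is assumed to be a step along the transposed readout weights, while the other hidden targets come from fixed random feedback matrices indexed like the feedback layers of the BrainCog constructor shown later in this lecture. Each pair of neighboring layers is then updated with a local delta rule.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class TinyGlobalFeedbackNet:
    """Toy rate-based network with fixed feedback from the output to hidden layers.

    Assumptions (not the GLSNN code): sigmoid rates replace LIF firing rates,
    the top hidden target uses the transposed readout weights, lower hidden
    targets use fixed random matrices B, and every update is a local delta rule.
    """

    def __init__(self, sizes, lr=0.2, eta=0.5, seed=0):
        rng = np.random.default_rng(seed)
        self.lr, self.eta = lr, eta
        self.w = [rng.normal(0.0, 0.5, (sizes[i + 1], sizes[i]))
                  for i in range(len(sizes) - 1)]
        # Fixed (never trained) feedback weights: output -> hidden layer `ind`,
        # for ind = 1 .. len(sizes) - 3; the top hidden layer gets none.
        self.b = {ind: rng.normal(0.0, 0.5, (sizes[ind], sizes[-1]))
                  for ind in range(1, len(sizes) - 2)}

    def forward(self, x):
        states = [x]
        for w in self.w:
            states.append(sigmoid(w @ states[-1]))
        return states

    def step(self, x, y):
        # 1) Forward phase: states of all layers.
        h = self.forward(x)
        e = h[-1] - y  # difference between prediction and label
        # 2) Targets: output -> label, top hidden -> directly from e,
        #    lower hidden layers -> fixed feedback projections of e.
        targets = {len(h) - 1: y,
                   len(h) - 2: h[-2] - self.eta * self.w[-1].T @ e}
        for ind, b in self.b.items():
            targets[ind] = h[ind] - self.eta * b @ e
        # 3) Local updates for all neighboring layer pairs at once (in place).
        for i, w in enumerate(self.w):
            w += self.lr * np.outer(targets[i + 1] - h[i + 1], h[i])
        return 0.5 * float(np.sum(e ** 2))


net = TinyGlobalFeedbackNet([4, 6, 6, 3])
x = np.array([0.9, 0.1, 0.5, 0.3])
y = np.array([0.0, 1.0, 0.0])
losses = [net.step(x, y) for _ in range(300)]
print(losses[0], losses[-1])  # squared error before and after training on one sample
```

Since no gradient is propagated backward layer by layer, every hidden layer's target depends only on the output error and its own feedback matrix, so all layers can be updated in the same step.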

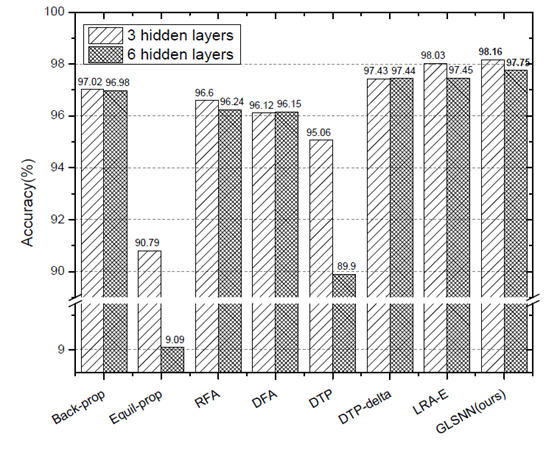

We implement our algorithm using the basic components in BrainCog, which we describe in detail later. To show that our algorithm still performs well as the network gets deeper, we test the accuracy of networks with different numbers of hidden layers, each containing 256 neurons. As shown in the figure, the accuracy of equilibrium propagation (Equil-Prop) drops from 90% to 9% as the network deepens, and the network becomes difficult to converge. This is because Equil-Prop, like Contrastive Hebbian Learning, defines two phases in the learning process and minimizes an energy function to carry out implicit feedforward and feedback propagation; when the network is deep, it is difficult for it to settle into a stable state. Compared with the other algorithms that remain stable, our algorithm performs better with both 3 and 6 hidden layers, which indicates its stability and superiority.

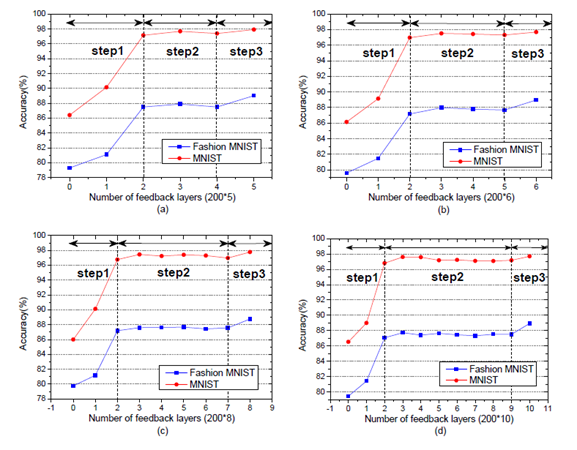

To investigate the effect of the feedback layers on the performance of the network, we built networks with 7, 8, 10, and 12 layers. First, we remove all the feedback layers from the network, so that only the weights of the last two layers can be updated. We then gradually add the feedback layers back. As the number of feedback layers increases, the performance of the network gradually improves, and GLSNN achieves its best performance once all the feedback layers are added. This shows that the feedback layers play a very important role in perception and learning.
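One way to organize this ablation is to track which feedforward weight matrices still receive a learning signal. The helper below is a hypothetical sketch (the name `trainable_ff_layers` is not part of BrainCog) built on the layer indexing of the BrainCog constructor and on two assumptions: the last weight matrix learns from the output error and the one below it from the directly computed penultimate-layer target, while each lower matrix `ff[ind - 1]` learns only when hidden layer `ind` has an active feedback layer. With no feedback layers it reproduces the "last two layers" case described above; the layer sizes in the example are illustrative.

```python
def trainable_ff_layers(network_sizes, active_feedback):
    """Indices of feedforward weight matrices that receive a learning signal.

    Hypothetical ablation helper: ff[-1] learns from the output error, ff[-2]
    from the directly computed top-hidden target, and ff[ind - 1] only if
    hidden layer `ind` has an active feedback layer.
    """
    n_ff = len(network_sizes) - 1
    possible_fb = set(range(1, len(network_sizes) - 2))
    unknown = set(active_feedback) - possible_fb
    if unknown:
        raise ValueError(f"no feedback layer exists for hidden layers {sorted(unknown)}")
    trainable = {n_ff - 1, n_ff - 2}
    trainable |= {ind - 1 for ind in active_feedback}
    return sorted(trainable)


sizes = [784] + [256] * 6 + [10]  # 6 hidden layers, 7 weight matrices
print(trainable_ff_layers(sizes, []))               # [5, 6]
print(trainable_ff_layers(sizes, [1, 2, 3, 4, 5]))  # [0, 1, 2, 3, 4, 5, 6]
```

Under these assumptions, each feedback layer added back unlocks exactly one more weight matrix, which is one way to read the gradual improvement described above.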

Based on the BrainCog framework, we implement the algorithm in the `glsnn.py` file under the `model_zoo` folder, using BrainCog's basic LIF neuron component (`LIFNode`). The algorithm is divided into two phases, the forward phase and the feedback phase, and the constructor defines the layers used in each.

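```python
import torch.nn as nn

from braincog.base.node.node import LIFNode
from braincog.model_zoo.base_module import BaseModule, BaseLinearModule


class BaseGLSNN(BaseModule):

    def __init__(self, input_size=784, hidden_sizes=[800] * 3, output_size=10):
        # NOTE: the base-class constructor call is not shown in this excerpt; an
        # nn.Module subclass must call super().__init__(...) before registering
        # submodules (see braincog/model_zoo/glsnn.py for the full constructor).

        # define forward and feedback layers
        network_sizes = [input_size] + hidden_sizes + [output_size]

        # Feedforward path: one spiking (LIF) linear layer between each pair of neighboring layers
        feedforward = []
        for ind in range(len(network_sizes) - 1):
            feedforward.append(
                BaseLinearModule(in_features=network_sizes[ind],
                                 out_features=network_sizes[ind + 1],
                                 node=LIFNode))
        self.ff = nn.ModuleList(feedforward)

        # Feedback path: plain linear maps from the output layer directly to
        # hidden layers 1 .. len(network_sizes) - 3 (the top hidden layer has none)
        feedback = []
        for ind in range(1, len(network_sizes) - 2):
            feedback.append(nn.Linear(network_sizes[-1], network_sizes[ind]))
        self.fb = nn.ModuleList(feedback)
```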
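The layer bookkeeping in this constructor can be checked without installing BrainCog. The plain-Python helper below (`glsnn_layer_shapes` is a hypothetical name, not part of BrainCog) reproduces only the index arithmetic and returns the (in, out) sizes of each feedforward and feedback layer. With the default arguments it shows that `range(1, len(network_sizes) - 2)` creates feedback layers for every hidden layer except the topmost one, the penultimate layer whose target is computed directly from the output error.

```python
def glsnn_layer_shapes(input_size=784, hidden_sizes=(800, 800, 800), output_size=10):
    """Mirror the index arithmetic of BaseGLSNN.__init__ (layer shapes only)."""
    network_sizes = [input_size, *hidden_sizes, output_size]
    # Feedforward: one layer between each pair of neighboring layers.
    ff = [(network_sizes[i], network_sizes[i + 1])
          for i in range(len(network_sizes) - 1)]
    # Feedback: output layer -> hidden layers 1 .. len - 3 (top hidden layer skipped).
    fb = [(network_sizes[-1], network_sizes[i])
          for i in range(1, len(network_sizes) - 2)]
    return ff, fb


ff, fb = glsnn_layer_shapes()
print(ff)  # [(784, 800), (800, 800), (800, 800), (800, 10)]
print(fb)  # [(10, 800), (10, 800)]
```

A network with n hidden layers therefore has n + 1 feedforward layers and n - 1 feedback layers.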

In the forward phase, LIF neurons propagate information, and the firing rate of each neuron serves as its forward state. In the feedback phase, the feedback connections compute the targets of the hidden layers. Finally, the weights are updated with the local synaptic plasticity rule, combining the feedforward and feedback states. The full code is available at:

https://github.com/BrainCog-X/Brain-Cog/tree/main/examples/Perception_and_Learning/img_cls/glsnn

https://github.com/BrainCog-X/Brain-Cog/blob/main/braincog/model_zoo/glsnn.py
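In the forward phase, LIF spikes are turned into firing rates that act as layer states. The sketch below is a generic discrete-time LIF neuron with hard reset, not BrainCog's `LIFNode`; the function name `lif_firing_rate`, the time constant `tau`, the threshold `v_th`, and the number of time steps are illustrative assumptions. The returned rate (spike count divided by time steps) is the kind of per-neuron state the feedback and update phases would consume.

```python
import numpy as np


def lif_firing_rate(input_current, steps=100, tau=2.0, v_th=1.0, v_reset=0.0):
    """Simulate LIF neurons under constant input; return spike count / steps."""
    current = np.asarray(input_current, dtype=float)
    v = np.full_like(current, v_reset)
    spike_count = np.zeros_like(current)
    for _ in range(steps):
        # Leaky integration: v relaxes toward the input with time constant tau.
        v = v + (current - v) / tau
        spikes = v >= v_th
        spike_count += spikes
        v = np.where(spikes, v_reset, v)  # hard reset after a spike
    return spike_count / steps


print(lif_firing_rate([0.5, 1.5, 3.0]))  # rates of 0.0, 0.5 and 1.0
```

Stronger input yields a higher rate, while an input whose steady-state potential stays below `v_th` (here 0.5) never fires.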

We also provide detailed training scripts. If you are interested in our work, feel free to cite our papers, and contact us via email if you have any questions.

```bibtex
@article{zhao2020glsnn,
  title={GLSNN: A Multi-Layer Spiking Neural Network Based on Global Feedback Alignment and Local STDP Plasticity},
  author={Zhao, Dongcheng and Zeng, Yi and Zhang, Tielin and Shi, Mengting and Zhao, Feifei},
  journal={Frontiers in Computational Neuroscience},
  volume={14},
  year={2020},
  publisher={Frontiers Media SA}
}

@misc{https://doi.org/10.48550/arxiv.2207.08533,
  doi = {10.48550/ARXIV.2207.08533},
  url = {https://arxiv.org/abs/2207.08533},
  author = {Zeng, Yi and Zhao, Dongcheng and Zhao, Feifei and Shen, Guobin and Dong, Yiting and Lu, Enmeng and Zhang, Qian and Sun, Yinqian and Liang, Qian and Zhao, Yuxuan and Zhao, Zhuoya and Fang, Hongjian and Wang, Yuwei and Li, Yang and Liu, Xin and Du, Chengcheng and Kong, Qingqun and Ruan, Zizhe and Bi, Weida},
  title = {BrainCog: A Spiking Neural Network based Brain-inspired Cognitive Intelligence Engine for Brain-inspired AI and Brain Simulation},
  publisher = {arXiv},
  year = {2022},
}
```