Lec 7 Quantum Superposition State Inspired Encoding With BrainCog

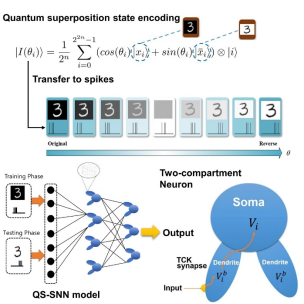

Spatio-temporal Encoding Inspired by Quantum Superposition State

This document introduces BrainCog's spatio-temporal spike sequence encoding module inspired by the quantum superposition state (QS encoding). Neuronal spike sequences are commonly encoded in one of two ways: by firing frequency or by firing phase. The spatio-temporal encoding introduced here combines both, so that information is carried along the two dimensions of frequency and phase. BrainCog provides a base class for this encoding method and applies it to a spiking neural network (SNN) task: recognizing images whose background has been reversed.

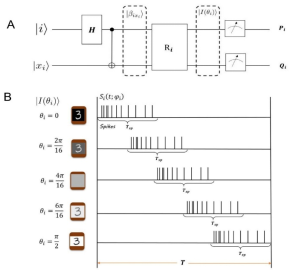

Drawing on traditional quantum image encoding, we use entangled qubits to encode both the original image and its background-reversed counterpart. The images encoded as quantum states are then converted into neuronal spike signals with different firing frequencies and phases, which are fed into the SNN for recognition and classification.
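As an illustration of the idea, and not BrainCog's exact formulation, a normalized pixel intensity x in [0, 1] can be mapped to a single-qubit state cos θ|0⟩ + sin θ|1⟩ with θ = πx/2. This angle mapping and the helper names `qubit_amplitudes` and `reverse_background` are assumptions for the sketch, which uses one qubit per pixel and does not model entanglement. Under this mapping the background-reversed pixel 1 − x produces the same state with its two amplitudes swapped, so both images can be held in one superposition:

```python
import numpy as np


def qubit_amplitudes(image):
    """Map intensities in [0, 1] to the (|0>, |1>) amplitudes of cos(t)|0> + sin(t)|1>.

    Assumed angle mapping: t = pi * x / 2 (illustrative only, not a BrainCog API).
    """
    theta = np.pi * np.asarray(image, dtype=float) / 2.0
    return np.cos(theta), np.sin(theta)


def reverse_background(image):
    """Background reversal for an image normalized to [0, 1] (hypothetical helper)."""
    return 1.0 - np.asarray(image, dtype=float)


image = np.array([0.0, 0.25, 1.0])
a0, a1 = qubit_amplitudes(image)
r0, r1 = qubit_amplitudes(reverse_background(image))

# Each per-pixel state is normalized: |a0|^2 + |a1|^2 = 1.
print(np.allclose(a0**2 + a1**2, 1.0))
# Reversing the background swaps the two amplitudes.
print(np.allclose(r0, a1) and np.allclose(r1, a0))
```

The swap follows from cos(π(1 − x)/2) = sin(πx/2), which is why a single superposition can stand for both the original and the reversed image.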

The information carried by a quantum encoding is similar in kind to the signals in an SNN. Neuronal spikes can encode spatio-temporal information through their firing rate and phase, and these two quantities can be used to represent quantum information. Individual spikes have essentially the same shape; what distinguishes spike trains is their firing frequency and phase, which gives them a distinctive spatio-temporal structure. We therefore represent the quantum-state image as a sequence of spikes.
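To make "rate and phase" concrete, the sketch below turns one complex amplitude into a deterministic binary spike train: the magnitude sets the firing rate and the argument shifts the spike times within a firing period. The function name, the linear rate mapping and the `max_rate` parameter are hypothetical choices for illustration; BrainCog's encoder uses its own Poisson-based scheme.

```python
import numpy as np


def amplitude_to_spikes(amplitude, steps, max_rate=0.5):
    """Encode a complex amplitude as a binary spike train of length `steps`.

    Hypothetical mapping: firing rate (spikes per step) = max_rate * |amplitude|,
    spike offset = arg(amplitude) expressed as a fraction of one firing period.
    """
    rate = max_rate * abs(amplitude)
    spikes = np.zeros(steps, dtype=int)
    if rate <= 0:
        return spikes  # zero amplitude -> silent neuron
    period = 1.0 / rate
    # Map the phase from (-pi, pi] to an offset in [0, period).
    offset = (np.angle(amplitude) % (2 * np.pi)) / (2 * np.pi) * period
    times = np.arange(offset, steps, period)
    spikes[np.floor(times).astype(int)] = 1
    return spikes


a = amplitude_to_spikes(1.0, 8)   # rate 0.5, phase 0
b = amplitude_to_spikes(-1.0, 8)  # same rate, phase pi
print(a)  # [1 0 1 0 1 0 1 0]
print(b)  # [0 1 0 1 0 1 0 1]
```

Both trains have the same firing frequency, so a rate-only code could not tell them apart; the phase dimension is what separates them.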

BrainCog's encoder module provides a base class implementation of QS encoding:

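```python
import numpy as np


class QSEncoder:
    """Spike sequence spatio-temporal encoder inspired by the quantum superposition state."""

    def __init__(self,
                 lambda_max,
                 steps,
                 sig_len,
                 shift=False,
                 noise=None,
                 noise_rate=None,
                 eps=1e-6
                 ) -> None:
        # Store the configuration under the attribute names used in __call__
        # (attribute names for lambda_max, noise_rate and eps are assumed).
        self.lambda_max = lambda_max
        self.steps = steps            # total number of simulation time steps
        self._sig_len = sig_len       # number of leading steps that carry spikes
        self._shift = shift           # use the phase-shifted encoding
        self._noise = noise           # use the noisy encoding
        self._noise_rate = noise_rate
        self._eps = eps

    def __call__(self, image, image_delta, image_ori, image_ori_delta):
        # noise_trans and shift_trans are implemented in BrainCog's source (not shown here).
        if self._noise:
            signals = self.noise_trans(image, image_ori, image_ori_delta)
        elif self._shift:
            signals = self.shift_trans(image, image_delta, image_ori, image_ori_delta)
        else:
            # Default: Poisson spikes in the first sig_len steps, silence afterwards.
            signals = np.zeros((self.steps, image.shape[0]))
            signal_poisson = np.random.poisson(image, (self._sig_len, image.shape[0]))
            signals[:self._sig_len] = signal_poisson
        return signals
```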
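In the default branch (no shift, no noise), `__call__` draws Poisson spike counts for the first `sig_len` time steps and leaves the remaining `steps - sig_len` steps silent. The standalone function below reproduces only that branch so its output can be inspected; the name `poisson_branch` and the seeded NumPy `Generator` (used instead of the global `np.random` state) are our own additions. Pixel values are assumed to be non-negative, since they are used directly as Poisson means.

```python
import numpy as np


def poisson_branch(image, steps, sig_len, seed=0):
    """Reproduce the default (shift=False, noise=None) branch of QSEncoder.__call__."""
    rng = np.random.default_rng(seed)
    image = np.asarray(image, dtype=float)
    signals = np.zeros((steps, image.shape[0]))
    # One Poisson draw per (time step, pixel), with the pixel value as the mean.
    signals[:sig_len] = rng.poisson(image, (sig_len, image.shape[0]))
    return signals


spikes = poisson_branch([0.0, 0.2, 0.9], steps=10, sig_len=6)
print(spikes.shape)  # (10, 3)
```

The output is laid out as (time steps, pixels); a pixel with value 0 never fires, and brighter pixels fire more often on average.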

Using the Spike Sequence Spatio-temporal Encoding Method in the QS-SNN Model

The QS encoding module packaged in BrainCog is used to build a multi-compartment spiking neural network (MC-SNN). Its neurons have two computing units, the dendrite and the soma, which makes them more biologically interpretable than leaky integrate-and-fire (LIF) neurons.
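To illustrate how a two-compartment neuron differs from a single-compartment LIF neuron, here is a minimal discrete-time sketch in which a dendritic potential integrates the input and drives the somatic potential, which fires and resets at a threshold. The function name, time constants, coupling strength `g_ds` and threshold `v_th` are illustrative assumptions; this is a generic two-compartment model, not the exact MC-SNN equations used in BrainCog.

```python
import numpy as np


def two_compartment_run(inputs, tau_d=5.0, tau_s=10.0, g_ds=0.5, v_th=1.0):
    """Simulate one two-compartment neuron driven by a 1-D input current sequence.

    Dendrite: leaky integration of the input current.
    Soma: leak plus a current proportional to the dendrite-soma voltage gap.
    Returns the binary spike train emitted by the soma.
    """
    v_d, v_s = 0.0, 0.0
    spikes = []
    for x in inputs:
        v_d += (-v_d + x) / tau_d                 # dendritic compartment
        v_s += -v_s / tau_s + g_ds * (v_d - v_s)  # somatic compartment, coupled to dendrite
        if v_s >= v_th:
            spikes.append(1)
            v_s = 0.0                             # reset the soma after a spike
        else:
            spikes.append(0)
    return np.array(spikes)


print(two_compartment_run(np.zeros(50)).sum())      # no input, no spikes
print(two_compartment_run(np.full(50, 2.0)).sum())  # constant drive produces spikes
```

Because the input reaches the soma only through the dendrite, the dendritic compartment acts as a low-pass filter that a point LIF neuron does not have.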

Implementation of MC-SNN in BrainCog:

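```python
import numpy as np
import torch
import torch.nn as nn

# Hidden_layer, Output_layer, the temporal `kernel` array and QSEncoder
# are defined elsewhere in the QSNN example and BrainCog's encoder module.


class Net(nn.Module):
    def __init__(self, net_size):
        super().__init__()
        self.input_size = net_size[0]
        # One Hidden_layer per consecutive pair of sizes, excluding the output layer.
        self.hidden_layers = nn.ModuleList([
            Hidden_layer(net_size[i], net_size[i + 1], net_size[-1])
            for i in range(len(net_size) - 2)
        ])
        self.out_layer = Output_layer(net_size[-2], net_size[-1])
        # Temporal kernel as a column vector on the GPU (requires CUDA).
        self.kernel = torch.from_numpy(kernel[:, np.newaxis]).cuda()
        # Keep the encoder class; routine() instantiates it for each call.
        self.qs_code = QSEncoder
```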
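The list comprehension in `Net.__init__` decides how many hidden layers are built from `net_size`. The hypothetical helper below mirrors that logic without constructing any layers, so the resulting shapes can be checked. The 784-dimensional input (a 28 × 28 image) is an assumption; the 500 hidden and 10 output neurons match the experiments described later in this lecture.

```python
def layer_shapes(net_size):
    """Mirror the layer construction in Net.__init__ (hypothetical helper).

    Returns the argument triples passed to each Hidden_layer and the
    (in, out) pair passed to Output_layer.
    """
    hidden = [(net_size[i], net_size[i + 1], net_size[-1])
              for i in range(len(net_size) - 2)]
    output = (net_size[-2], net_size[-1])
    return hidden, output


hidden, output = layer_shapes([784, 500, 10])
print(hidden)  # [(784, 500, 10)]
print(output)  # (500, 10)
```

A three-entry `net_size` therefore yields exactly one hidden layer, and every hidden layer also receives the output size `net_size[-1]` as its third argument.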

After the background-reversed image has been encoded as quantum superposition state spike sequences, it is fed into the MC-SNN for classification:

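```python
# Method of Net. lambda_max, STEPS and SLEN are module-level constants in the example script.
def routine(self,
            input_,
            input_delta,
            image_ori,
            image_ori_delta,
            shift,
            label,
            test=False,
            noise=False,
            noise_rate=None):
    # Encode the (background-reversed) image as QS spike sequences.
    encoder = self.qs_code(lambda_max, STEPS, SLEN, shift, noise, noise_rate)
    input_ = encoder(input_, input_delta, image_ori, image_ori_delta)
    input_ = torch.from_numpy(input_).to(self.kernel.device)
    # Filter the spike trains with the temporal kernel to obtain postsynaptic potentials.
    psp = torch.mm(input_, self.kernel).abs().float()
    # Run the MC-SNN dynamics for STEPS time steps.
    for _ in range(STEPS):
        self.update_state(psp, label, test=test)
```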
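`routine` collapses the spike trains into postsynaptic potentials by multiplying them with a temporal kernel (`torch.mm(input_, self.kernel)`). The definition of `kernel` is not shown here, so the NumPy sketch below assumes a causal exponential kernel of length `steps` read out at the last time step, and spike trains laid out as (neurons, time steps). The helper names and `tau` are hypothetical, and the kernel shape and layout in the BrainCog example may differ.

```python
import numpy as np


def exp_kernel(steps, tau=4.0):
    """Exponential kernel weighting recent spikes most (assumed form)."""
    t = np.arange(steps)
    return np.exp(-(steps - 1 - t) / tau)


def psp(spikes, kernel):
    """spikes: (neurons, steps) array; returns one PSP value per neuron."""
    # Product with a (steps, 1) column, analogous to torch.mm(input_, kernel[:, np.newaxis]).
    return np.abs(spikes @ kernel[:, np.newaxis]).ravel()


steps = 6
k = exp_kernel(steps)
spikes = np.array([[1, 0, 0, 0, 0, 0],   # early spike
                   [0, 0, 0, 0, 0, 1]])  # late spike
print(psp(spikes, k))  # early spike weighted by exp(-5/4), late spike by 1.0
```

With a kernel of this kind the same number of spikes can yield different potentials depending on when they occur, which is how spike phase, not only spike count, can reach the network.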

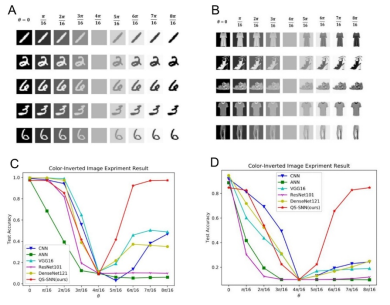

We constructed a 3-layer QS-SNN model with a hidden layer of 500 neurons and 10 output neurons. The ANNs used for comparison have the same structure. A simple CNN with three convolutional layers and two pooling operations is used to assess how well different feature extraction methods cope with background-reversed images. We also tested how deep architectures such as VGG16, ResNet101, and DenseNet121 perform on background-reversed images.

The results show that traditional fully connected ANNs and convolutional models struggle to handle large changes in image properties such as background inversion, even when the spatial structure of the image is unchanged. By contrast, the QS-SNN model keeps its recognition performance essentially unchanged on background-reversed images.

Code Link:

https://github.com/BrainCog-X/Brain-Cog/tree/main/examples/Perception_and_Learning/QSNN

Related papers:

```bibtex
@article{SUN2021102880,
  title   = {Quantum superposition inspired spiking neural network},
  journal = {iScience},
  volume  = {24},
  number  = {8},
  pages   = {102880},
  year    = {2021},
  issn    = {2589-0042},
  doi     = {10.1016/j.isci.2021.102880},
  url     = {https://www.sciencedirect.com/science/article/pii/S2589004221008488},
  author  = {Yinqian Sun and Yi Zeng and Tielin Zhang}
}

@misc{https://doi.org/10.48550/arxiv.2207.08533,
  doi       = {10.48550/ARXIV.2207.08533},
  url       = {https://arxiv.org/abs/2207.08533},
  author    = {Zeng, Yi and Zhao, Dongcheng and Zhao, Feifei and Shen, Guobin and Dong, Yiting and Lu, Enmeng and Zhang, Qian and Sun, Yinqian and Liang, Qian and Zhao, Yuxuan and Zhao, Zhuoya and Fang, Hongjian and Wang, Yuwei and Li, Yang and Liu, Xin and Du, Chengcheng and Kong, Qingqun and Ruan, Zizhe and Bi, Weida},
  title     = {BrainCog: A Spiking Neural Network based Brain-inspired Cognitive Intelligence Engine for Brain-inspired AI and Brain Simulation},
  publisher = {arXiv},
  year      = {2022}
}
```