Lec 16 Brain-inspired Bodily Self-perception Model Based on BrainCog

Hello! This lecture shows how to build a bodily self-perception model with BrainCog that achieves motor-visual associative learning, so that a robot can pass the mirror test.

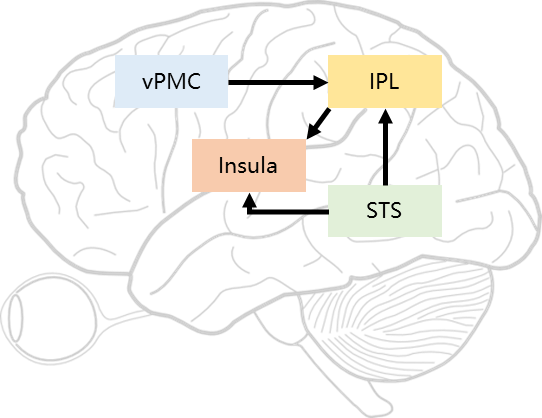

Drawing on findings from brain science and neuroscience on self-perception, we proposed a brain-inspired bodily self-perception model. Cognitive psychology experiments have shown that the inferior parietal lobule (IPL) is activated in response to movements produced by one's own face and body, and that the Insula is activated in both bodily-ownership and self-recognition experiments. The model is built around these two areas. It also includes the ventral premotor cortex (vPMC), which generates self-motion information, and the superior temporal sulcus (STS), which detects motion information in the visual field.

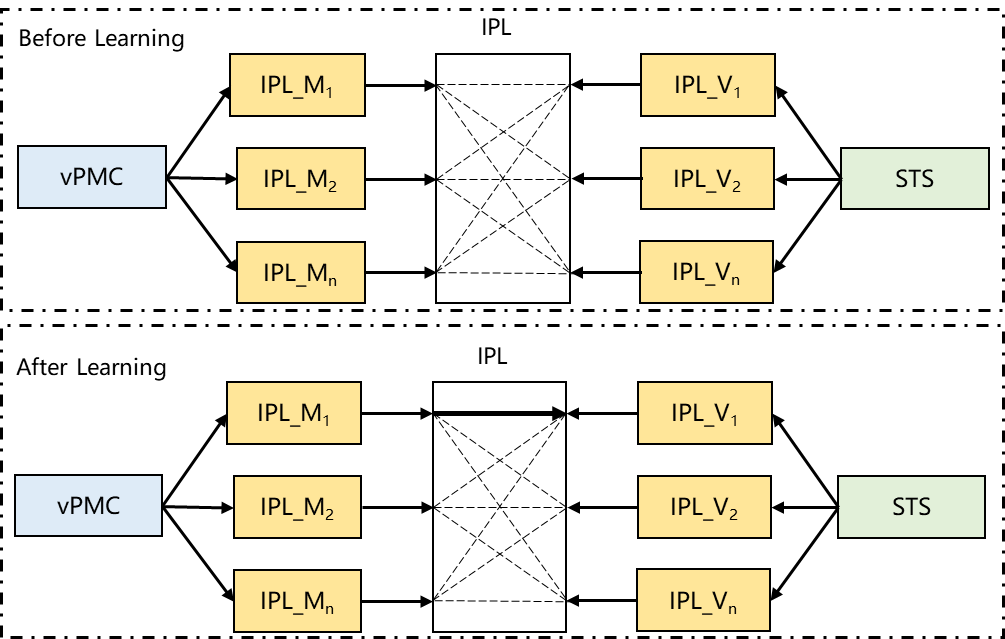

The IPL implements motor-visual associative learning in the computational model. It contains two subareas, IPLM and IPLV: IPLM holds the motor perception neurons of the IPL, and IPLV holds its visual perception neurons. IPLM receives self-motion information from vPMC, while IPLV receives visual motion information from STS.

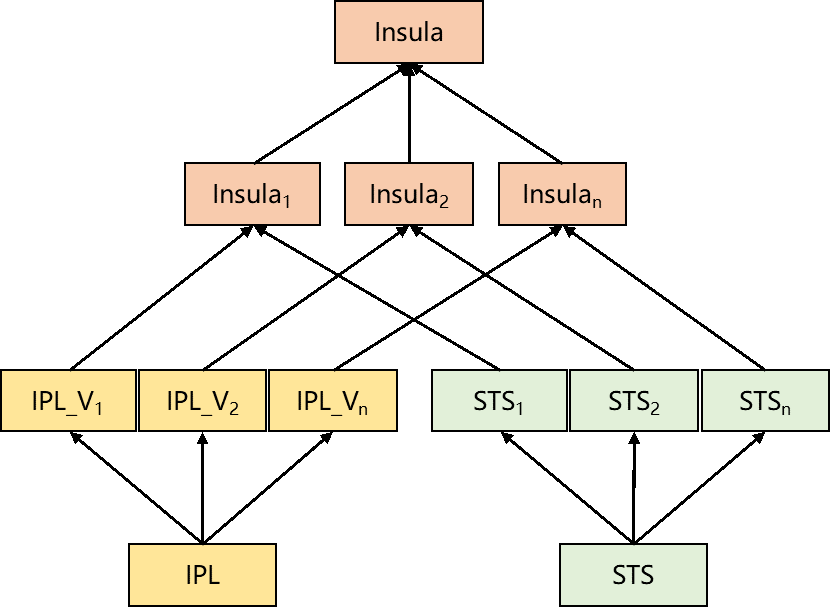

The Insula implements self-representation in the computational model. It is activated when the agent detects that the motion in its visual field is generated by itself. The Insula receives input from IPLV and STS: IPLV outputs the visual feedback predicted from the agent's own motion, while STS outputs the motion actually detected by vision. If both inputs activate the same neuron in the Insula, the Insula is activated.
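The coincidence rule described above can be illustrated with a small sketch. This is not BrainCog code: the function names, the 0/1 spike lists, and the idea that each Insula neuron stands for one motion-angle bin are assumptions made only for illustration.

```python
def insula_response(iplv_spikes, sts_spikes):
    """Per-neuron Insula activity for one time step (illustrative only).

    Both inputs are lists of 0/1 values, one entry per Insula neuron.
    A neuron fires only when the IPLV prediction and the STS
    observation drive it at the same time.
    """
    if len(iplv_spikes) != len(sts_spikes):
        raise ValueError("inputs must have the same length")
    return [p & s for p, s in zip(iplv_spikes, sts_spikes)]


def insula_active(iplv_spikes, sts_spikes):
    """True if at least one Insula neuron receives both inputs."""
    return any(insula_response(iplv_spikes, sts_spikes))


predicted = [0, 0, 1, 0]   # IPLV: predicted visual motion falls in bin 2
seen_self = [0, 0, 1, 0]   # STS: observed motion also falls in bin 2
seen_other = [0, 1, 0, 0]  # STS: observed motion falls in bin 1

print(insula_active(predicted, seen_self))   # True
print(insula_active(predicted, seen_other))  # False
```

In the actual model the Insula is a spiking network, so the hard AND used here stands in for two inputs jointly pushing a neuron over its firing threshold.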

The model realizes motor-visual associative learning through spike-timing-dependent plasticity (STDP), driven by the difference in firing times between IPLM and IPLV neurons.
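As a rough illustration of how a firing-time difference turns into a weight change, here is a minimal sketch of the standard pair-based STDP rule. The text does not give the exact rule or parameters used in the model, so the exponential window, the constants, and the choice of IPLM as the presynaptic side and IPLV as the postsynaptic side are all assumptions.

```python
import math


def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair (times in ms).

    Assumed pair-based rule with illustrative constants:
    pre before post strengthens the synapse, post before pre weakens it.
    """
    dt = t_post - t_pre
    if dt > 0:
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


# Hypothetical example: an IPLM neuron fires at 10 ms and the IPLV neuron
# driven by the resulting visual feedback fires at 15 ms.
print(stdp_delta_w(t_pre=10.0, t_post=15.0) > 0)  # True: potentiation
print(stdp_delta_w(t_pre=15.0, t_post=10.0) < 0)  # True: depression
```

Under this rule, connections from a motor neuron to the visual neurons that reliably fire shortly after it are strengthened, which is one way the motor-to-visual mapping could be learned.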

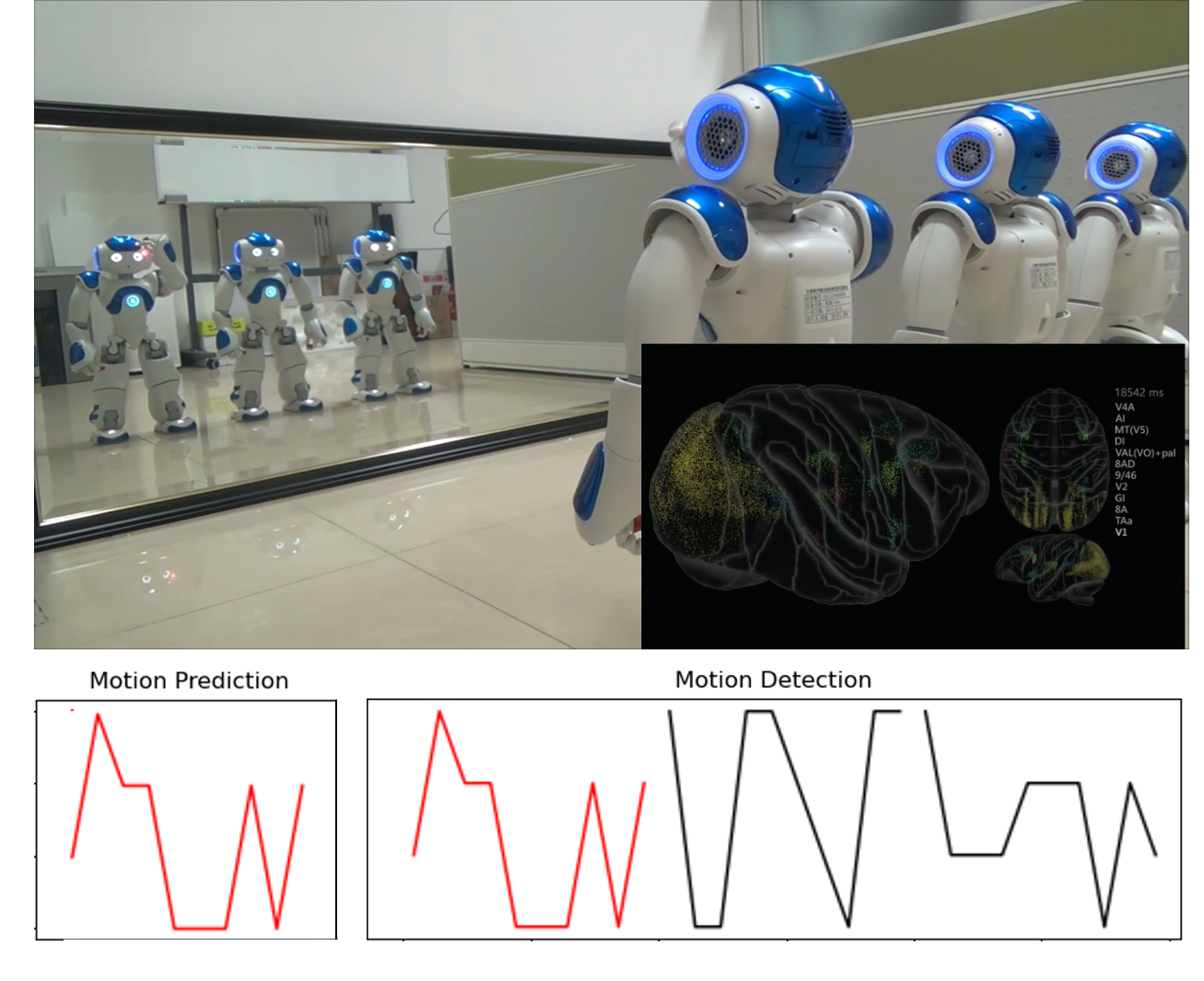

Finally, a multi-robot mirror test is simulated. After training, the robot outputs a predicted visual motion track in IPLV based on the self-motion track output by vPMC. Among the three visual motion tracks detected by STS, the leftmost track is consistent with the prediction in IPLV and therefore activates the Insula, indicating that the robot attributes the leftmost motion track to itself.

The computational model is built with BrainCog and consists of IPL-SNN for motor-visual associative learning and Insula-SNN for self-representation. First, set the number of neurons in each brain area. Next, use the connection module under base to build the connections between brain areas. Then build the brain areas themselves through IPL and Insula under brainarea. IPL-SNN uses the Izhikevich neuron model from node and the STDP rule from the learning rules to carry out learning. The same goes for Insula-SNN.
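For readers unfamiliar with the Izhikevich neuron mentioned above, the following is a plain-Python sketch of its standard equations, integrated with a simple Euler step. It does not use or reproduce the BrainCog node API; the function name, the step size, and the regular-spiking parameter values are assumptions chosen for illustration.

```python
def simulate_izhikevich(current, steps=1000, dt=0.5,
                        a=0.02, b=0.2, c=-65.0, d=8.0):
    """Simulate one Izhikevich neuron under constant input current.

    Returns the spike times in ms. Defaults are the commonly quoted
    regular-spiking parameters, not values taken from the model.
    """
    v = c          # membrane potential (mV)
    u = b * v      # recovery variable
    spike_times = []
    for step in range(steps):
        v += dt * (0.04 * v * v + 5.0 * v + 140.0 - u + current)
        u += dt * a * (b * v - u)
        if v >= 30.0:          # spike: record it, then reset
            spike_times.append(step * dt)
            v = c
            u += d
    return spike_times


spikes = simulate_izhikevich(current=10.0)
print(len(spikes) > 0)                     # True: the neuron fires
print(simulate_izhikevich(current=0.0))    # []: no input, no spikes
```

The spike times produced by neurons like this are what a timing-based learning rule such as STDP operates on.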

Once the whole framework is built, the network can be trained and tested. During training, vPMC outputs the motion angle generated by the robot itself, and STS1, STS2, and STS3 output the motion angles detected by vision. This information is fed into IPL-SNN to realize motor-visual associative learning.

During testing, IPLV outputs the visual feedback predicted from the robot's own movement, and STS outputs the detected visual motion tracks STS1, STS2, and STS3. This information is fed into the Insula, and the visual track that activates the Insula the most times is taken to be the one generated by the robot's own motion.
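The selection step in the test phase can be sketched as a simple count-and-compare. This is an illustrative simplification, not the repository's code: tracks are represented as hypothetical sequences of motion-angle bin indices, and an Insula activation is approximated as the predicted and observed bins matching at the same time step.

```python
def count_insula_activations(predicted_track, observed_track):
    """Count time steps where predicted and observed angle bins coincide."""
    return sum(1 for p, o in zip(predicted_track, observed_track) if p == o)


def identify_self_track(predicted_track, observed_tracks):
    """Return the name of the track with the most activations, plus all counts."""
    counts = {
        name: count_insula_activations(predicted_track, track)
        for name, track in observed_tracks.items()
    }
    best = max(counts, key=counts.get)
    return best, counts


# Made-up example data: angle-bin index per time step.
iplv_prediction = [0, 1, 2, 3, 2, 1]
sts_tracks = {
    "STS1": [0, 1, 2, 3, 2, 1],   # matches the prediction at every step
    "STS2": [3, 2, 1, 0, 1, 2],   # never matches
    "STS3": [0, 0, 1, 1, 2, 2],   # matches at two steps
}

best, counts = identify_self_track(iplv_prediction, sts_tracks)
print(best)    # STS1
print(counts)  # {'STS1': 6, 'STS2': 0, 'STS3': 2}
```

Counting over the whole track rather than deciding from a single time step makes the decision tolerant of occasional chance matches with another robot's motion.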

For details, see the code:

https://github.com/BrainCog-X/Brain-Cog/tree/main/examples/Social_Cognition/mirror_test

If you find this work useful, please cite the papers below. If you have any questions, feel free to contact us by email.

@article{zeng2018toward,

title={Toward robot self-consciousness (ii): brain-inspired robot bodily self model for self-recognition},

author={Zeng, Yi and Zhao, Yuxuan and Bai, Jun and Xu, Bo},

journal={Cognitive Computation},

volume={10},

number={2},

pages={307--320},

year={2018},

publisher={Springer}

}

@article{zeng2022braincog,

doi = {10.48550/ARXIV.2207.08533},

url = {https://arxiv.org/abs/2207.08533},

author = {Zeng, Yi and Zhao, Dongcheng and Zhao, Feifei and Shen, Guobin and Dong, Yiting and Lu, Enmeng and Zhang, Qian and Sun, Yinqian and Liang, Qian and Zhao, Yuxuan and Zhao, Zhuoya and Fang, Hongjian and Wang, Yuwei and Li, Yang and Liu, Xin and Du, Chengcheng and Kong, Qingqun and Ruan, Zizhe and Bi, Weida},

title = {BrainCog: A Spiking Neural Network based Brain-inspired Cognitive Intelligence Engine for Brain-inspired AI and Brain Simulation},

publisher = {arXiv},

year = {2022},

}