Lec 14 Multisensory Integration Based on BrainCog

Concept learning, or the ability to recognize commonalities and contrasts across a group of linked events in order to generate structured knowledge, is a crucial component of cognition. Multisensory integration benefits concept learning and plays an important role in semantic processing.

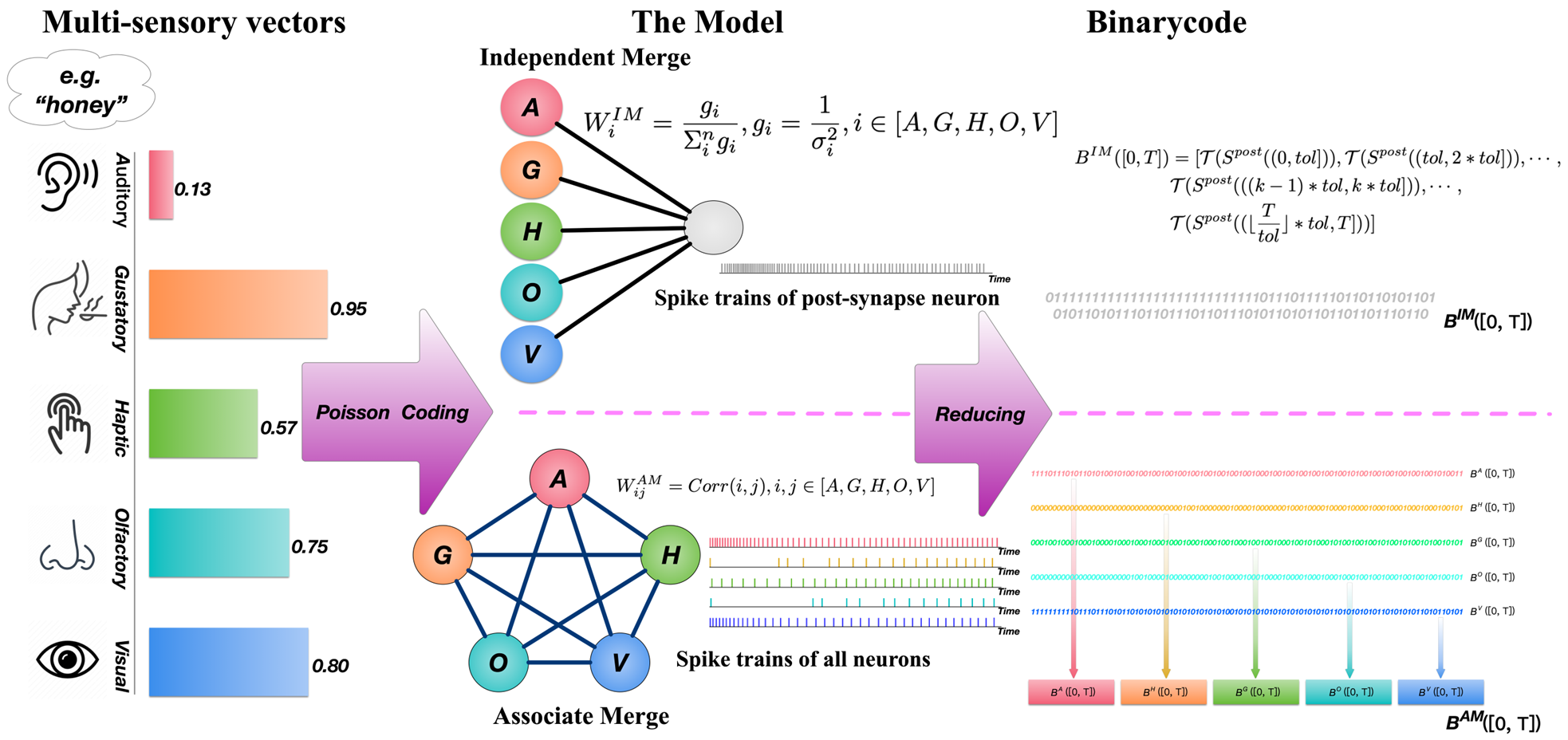

We present a multisensory concept learning framework based on SNNs, implemented with BrainCog. The model's input is a multisensory vector labeled by cognitive psychologists, and its output is the integrated vector produced by SNN-based integration.

We implement the SNN-based multisensory integration framework in MultisensoryIntegrationDEMO_AM.py and MultisensoryIntegrationDEMO_IM.py. The function get_concept_datase_dic_and_initial_weights_lst() loads and preprocesses the dataset and obtains the initial weights. The classes IMNet and AMNet define the structure of the IM and AM models, respectively. For the presynaptic neurons, the function convert_vec_into_spike_trains() generates the input spike trains.

For the postsynaptic neurons, the function reducing_to_binarycode() converts the recorded spike trains into the multisensory integrated output for each concept. Its only hyperparameter is the tolerance.

The script measure_and_visualization.py measures and visualizes the results.

Some details of the framework are shown below:

Load Multisensory Dataset

When implementing the framework in BrainCog, we use the well-known multisensory dataset BBSR (Brain-based Componential Semantic Representation). This experiment focuses on five senses: vision, touch, sound, smell, and taste. We normalize the data and, for each of these five senses, use the average value of its corresponding sub-dimensions in BBSR.

Some examples are as follows:

| Concept | Visual | Somatic (touch) | Audition | Taste | Smell |
|---|---|---|---|---|---|
| advantage | 0.213333333 | 0.032 | 0 | 0 | 0 |
| arm | 2.5111112 | 2.2733334 | 0.133333286 | 0.233333 | 0.4 |
| ball | 1.9580246 | 2.3111112 | 0.523809429 | 0.185185 | 0.111111 |
| baseball | 2.2714286 | 2.6071428 | 0.352040714 | 0.071429 | 0.392857 |
| bee | 2.795698933 | 2.4129034 | 2.096774286 | 0.290323 | 0.419355 |
| beer | 1.4866666 | 2.2533334 | 0.190476286 | 5.8 | 4.6 |
| bird | 2.7632184 | 2.027586 | 3.064039286 | 1.068966 | 0.517241 |
| car | 2.521839133 | 2.9517244 | 2.216748857 | 0 | 2.206897 |
| foot | 2.664444533 | 2.58 | 0.380952429 | 0.433333 | 3 |
| honey | 1.757142867 | 2.3214286 | 0.015306143 | 5.642857 | 4.535714 |
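Several of the raw BBSR averages above exceed 1 (for example, 5.8 for the taste of beer), whereas the input encoder samples spikes with torch.bernoulli and therefore needs values in [0, 1]. The exact normalization used in the BrainCog scripts is not stated here, so the sketch below assumes per-modality min-max scaling across concepts and applies it to four rows of the table. The helper name `min_max_normalize` is hypothetical.

```python
import numpy as np

# Four rows from the table above (column order as in the table)
concepts = {
    "advantage": [0.213333333, 0.032, 0, 0, 0],
    "beer": [1.4866666, 2.2533334, 0.190476286, 5.8, 4.6],
    "bird": [2.7632184, 2.027586, 3.064039286, 1.068966, 0.517241],
    "honey": [1.757142867, 2.3214286, 0.015306143, 5.642857, 4.535714],
}


def min_max_normalize(concept_dict):
    # Assumed scheme: rescale each modality (column) independently to [0, 1]
    names = list(concept_dict)
    data = np.array([concept_dict[n] for n in names], dtype=float)
    lo, hi = data.min(axis=0), data.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)  # avoid dividing by zero for constant columns
    scaled = (data - lo) / span
    return {n: scaled[i].tolist() for i, n in enumerate(names)}


normalized = min_max_normalize(concepts)
print(normalized["beer"])  # taste and smell map to 1.0, the largest values in this subset
```

With only four concepts the scaling is dominated by extremes such as beer and honey; on the full dataset the minima and maxima would come from all concepts.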

Input Encoding

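We encode the multisensory inputs with Poisson (rate) coding: at each time step, each input neuron fires with probability equal to its normalized modality value, so the values must lie in [0, 1].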

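```python
import torch


def convert_vec_into_spike_trains(each_concept_vec, time):
    # each_concept_vec: normalized modality values in [0, 1], one per sense
    # time: number of simulation steps (a global in the original snippet,
    #       passed explicitly here so the function is self-contained)
    # Repeat the vector once per time step -> rate matrix of shape (time, n_senses)
    rates = torch.tensor(list(each_concept_vec) * time, dtype=torch.float).view(time, -1)
    # At every step each neuron spikes (1.0) with probability equal to its rate
    vec_spike_trains = torch.bernoulli(rates)
    return vec_spike_trains
```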
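The per-step Bernoulli sampling can be checked empirically: over many time steps, the fraction of steps in which a neuron fires approaches its input probability. The standalone sketch below illustrates this; the helper name `bernoulli_rate_code` and the fixed seed are hypothetical and not part of BrainCog, and it builds the (time, n) rate matrix with `Tensor.repeat` instead of list replication.

```python
import torch


def bernoulli_rate_code(probs, time, seed=0):
    # probs: one firing probability in [0, 1] per modality neuron
    g = torch.Generator().manual_seed(seed)
    # Repeat the probability vector once per time step -> shape (time, n_modalities)
    rates = torch.as_tensor(probs, dtype=torch.float).repeat(time, 1)
    # Each entry independently becomes 1 (spike) with its probability, else 0
    return torch.bernoulli(rates, generator=g)


spikes = bernoulli_rate_code([0.0, 0.25, 0.5, 0.75, 1.0], time=2000)
print(spikes.shape)        # torch.Size([2000, 5])
print(spikes.mean(dim=0))  # empirical firing rates, close to the input probabilities
```

A longer simulation window gives empirical rates closer to the input values, at the cost of more time steps per concept.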

The Model

Because no biological study has yet shown whether information from multiple senses is independent or associated before integration, we design two paradigms: Independent Merge (IM) and Associate Merge (AM). Both paradigms take the same type of input and produce the same type of output; only the SNN architecture differs.

The AM paradigm assumes that the information from each modality of the concept is associated before integration. It is a five-neuron SNN in which each neuron receives the stimulus from one of the concept's five modalities. The neurons are connected to one another, with no self-connections. We record the spike trains of all five neurons and transform them into the integrated vector for the concept.

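```python
import torch
import torch.nn as nn
from torch.nn import Parameter
from braincog.base.node.node import LIFNode  # assumed import path for BrainCog's LIF neuron


class AMNet(nn.Module):
    def __init__(self,
                 in_features: int,
                 out_features: int,
                 givenWeights,
                 bias=False,
                 node=LIFNode,
                 threshold=5,
                 tau=0.1):
        super().__init__()
        if node is None:
            raise TypeError("a spiking neuron class must be passed as `node`")
        # Connections among the five modality neurons; AM has no self-connections
        # (e.g. zero diagonal entries in givenWeights)
        self.fc = nn.Linear(in_features=in_features,
                            out_features=out_features, bias=bias)
        self.fc.weight = Parameter(torch.as_tensor(givenWeights, dtype=torch.float))
        # Spiking neuron layer with the given firing threshold and time constant
        self.node = node(threshold, tau)

    def forward(self, x):
        x = torch.as_tensor(x, dtype=torch.float)  # accept lists/arrays as well as tensors
        x = self.fc(x)    # weighted synaptic input
        x = self.node(x)  # spike output of each neuron
        return x
```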
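`AMNet.forward` maps one input to one spike output, so the recurrent AM dynamics presumably come from calling it once per time step. How the demo script wires the time steps together is not shown in this section, so the following is only a self-contained sketch under stated assumptions: it replaces BrainCog's `LIFNode` with a hand-written leaky integrate-and-fire update (hypothetical `decay` and `threshold` values), feeds each neuron its own modality's spikes with unit weight, and delivers lateral input from the other neurons' spikes at the previous step. The name `simulate_am` is hypothetical.

```python
import torch


def simulate_am(spike_trains, weights, threshold=1.0, decay=0.9):
    # spike_trains: (time, 5) external input spikes, one column per modality
    # weights: (5, 5) lateral weights; weights[i, j] connects neuron j to neuron i
    weights = torch.as_tensor(weights, dtype=torch.float)
    n = weights.shape[0]
    weights = weights * (1.0 - torch.eye(n))  # remove self-connections
    v = torch.zeros(n)                        # membrane potentials
    prev_spikes = torch.zeros(n)
    record = []
    for x_t in spike_trains:
        # Own-modality input (unit weight, an assumption) plus lateral input
        # from the other neurons' spikes at the previous time step
        v = decay * v + x_t + weights @ prev_spikes
        prev_spikes = (v >= threshold).float()
        v = v * (1.0 - prev_spikes)           # hard reset after a spike
        record.append(prev_spikes)
    return torch.stack(record)                # (time, 5) spike trains of all neurons


W = torch.full((5, 5), 10.0)  # hypothetical strong lateral weights
inp = torch.zeros(3, 5)
inp[0, 0] = 1.0               # only the first (visual) neuron is stimulated, at t = 0
print(simulate_am(inp, W))
# rows: t=0 -> [1, 0, 0, 0, 0], t=1 -> [0, 1, 1, 1, 1], t=2 -> [1, 1, 1, 1, 1]
```

The example shows the associative effect: a spike in one modality neuron propagates to the other four, while the zeroed diagonal keeps the first neuron from exciting itself at t=1.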

The IM paradigm builds on the common cognitive-psychology assumption that the information from each modality of the concept is independent before integration. It is a two-layer SNN: the first layer has five neurons, each receiving the stimulus from one of the concept's five modalities, and the second layer has a single neuron that represents the neural state after multisensory integration. We record the spike train of this postsynaptic neuron and transform it into the integrated vector for the concept.

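```python
import torch
import torch.nn as nn
from torch.nn import Parameter
from braincog.base.node.node import LIFNode  # assumed import path for BrainCog's LIF neuron


class IMNet(nn.Module):
    def __init__(self,
                 in_features: int,
                 out_features: int,
                 givenWeights,
                 bias=False,
                 node=LIFNode,
                 threshold=5,
                 tau=0.1):
        super().__init__()
        if node is None:
            raise TypeError("a spiking neuron class must be passed as `node`")
        # Synapses from the five modality neurons to the integration neuron;
        # givenWeights has shape (out_features, in_features)
        self.fc = nn.Linear(in_features=in_features,
                            out_features=out_features, bias=bias)
        self.fc.weight = Parameter(torch.as_tensor(givenWeights, dtype=torch.float))
        # Spiking neuron layer with the given firing threshold and time constant
        self.node = node(threshold, tau)

    def forward(self, x):
        x = torch.as_tensor(x, dtype=torch.float)  # accept lists/arrays as well as tensors
        x = self.fc(x)    # weighted sum of presynaptic spikes
        x = self.node(x)  # spike output of the postsynaptic neuron
        return x
```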
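To see how the IM pieces fit together over time, here is a self-contained sketch that feeds Bernoulli spike trains through a 5 to 1 `nn.Linear` layer and a simplified leaky integrate-and-fire neuron, returning the postsynaptic spike record (the kind of 0/1 sequence that reducing_to_binarycode() consumes). It uses a hand-written LIF update rather than BrainCog's `LIFNode`; the function name `simulate_im`, the weights, threshold, decay, and number of time steps are hypothetical values chosen for illustration.

```python
import torch
import torch.nn as nn
from torch.nn import Parameter


def simulate_im(spike_trains, weights, threshold=1.0, decay=0.9):
    # spike_trains: (time, 5) presynaptic spikes, one column per modality
    # weights: (1, 5) synapses from the modality neurons to the integration neuron
    weights = torch.as_tensor(weights, dtype=torch.float)
    fc = nn.Linear(in_features=weights.shape[1], out_features=1, bias=False)
    fc.weight = Parameter(weights)
    v = torch.zeros(1)  # membrane potential of the postsynaptic neuron
    post_states = []
    with torch.no_grad():
        for x_t in spike_trains:              # one row per time step
            v = decay * v + fc(x_t)           # leak, then add the weighted input
            spike = (v >= threshold).float()  # fire once the threshold is reached
            v = v * (1.0 - spike)             # hard reset to 0 after a spike
            post_states.append(int(spike.item()))
    return post_states


torch.manual_seed(0)
probs = torch.tensor([0.8, 0.6, 0.2, 0.1, 0.1])      # hypothetical normalized concept vector
spike_trains = torch.bernoulli(probs.repeat(50, 1))  # (50, 5) input spike trains
post = simulate_im(spike_trains, torch.full((1, 5), 0.3))
print("".join(map(str, post)))  # 50-step postsynaptic spike record
```

Unlike the AM sketch, there are no lateral connections here: each modality affects the output only through its single weight onto the integration neuron, which matches the independence assumption of IM.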

Get Multisensory Integrated Outputs

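The function reducing_to_binarycode() turns the recorded spike states of the postsynaptic neuron into the multisensory integrated output for each concept, and tolerance is its only hyperparameter. The spike train is split into consecutive windows of tolerance time steps (the last window is zero-padded if necessary), and each window contributes "1" to the binary code if it contains at least one spike and "0" otherwise.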

```python
import numpy as np


def reducing_to_binarycode(post_neuron_states_lst, tolerance):
    # Spike state (0 or 1) of the postsynaptic neuron at each time step
    post_neuron_states_lst = [int(i) for i in post_neuron_states_lst]
    # Zero-pad so that the length is a multiple of tolerance
    if len(post_neuron_states_lst) % tolerance != 0:
        placeholder = [0] * (tolerance - len(post_neuron_states_lst) % tolerance)
        post_neuron_states_lst_with_placeholder = post_neuron_states_lst + placeholder
    else:
        post_neuron_states_lst_with_placeholder = post_neuron_states_lst
    # One row per window of `tolerance` time steps
    post_neuron_states_lst_with_placeholder = np.array(
        post_neuron_states_lst_with_placeholder).reshape(-1, tolerance)
    # A window maps to "1" if it contains at least one spike, otherwise "0"
    binarycode = ""
    for sub_arr in post_neuron_states_lst_with_placeholder:
        if 1 in sub_arr:
            binarycode += "1"
        else:
            binarycode += "0"
    return binarycode
```
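The effect of tolerance shows up on a small worked example: for a spike train of T steps the code has ceil(T / tolerance) bits, so a larger tolerance gives a shorter, coarser code. The sketch below restates the same windowing rule in vectorized NumPy form; the name `binarize_windows` and the 8-step spike train are hypothetical.

```python
import numpy as np


def binarize_windows(states, tolerance):
    # Vectorized restatement of the windowing rule in reducing_to_binarycode
    states = np.asarray(states, dtype=int)
    pad = (-len(states)) % tolerance  # zeros needed to fill the last window
    windows = np.pad(states, (0, pad)).reshape(-1, tolerance)
    # A window becomes "1" if it holds at least one spike, else "0"
    return "".join("1" if w.any() else "0" for w in windows)


states = [0, 0, 1, 0, 0, 0, 0, 1]  # hypothetical 8-step postsynaptic spike train
for tol in (2, 3, 4, 8):
    print(tol, binarize_windows(states, tol))
# 2 0101
# 3 101
# 4 11
# 8 1
```

With tolerance 3, the train is padded to 9 steps and split into [0, 0, 1], [0, 0, 0], [0, 1, 0], giving "101".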

How to Run

To get the multisensory integrated vectors:

```shell
python MultisensoryIntegrationDEMO_AM.py
python MultisensoryIntegrationDEMO_IM.py
```

To measure and analyze the vectors:

```shell
python measure_and_visualization.py
```

Our framework is a pioneering work in concept learning with SNNs and provides a solid foundation for modeling human-like concept learning. The source code of the framework is available at:

https://github.com/BrainCog-X/Brain-Cog/tree/main/examples/Perception_and_Learning/MultisensoryIntegration

If you find the framework useful in your work, please cite this source:

```bibtex
@article{wang2022multisensory,
  title={Multisensory Concept Learning Framework Based on Spiking Neural Networks},
  author={Wang, Yuwei and Zeng, Yi},
  journal={Frontiers in Systems Neuroscience},
  volume={16},
  year={2022},
  publisher={Frontiers Media SA}
}
```